Artificial Intelligence (AI) has progressed rapidly in recent years, but what happens when AI surpasses human intelligence? Is AI threat to national security? This question is at the heart of discussions on Artificial super intelligence (ASI). Scientists, philosophers and computer experts around the world are trying to predict and prepare for a future where human level AI could transform every aspect of our lives, from medicine to warfare to governance.

In this blog article, we shall discuss about artificial superintelligence and its implications, along with all pros and cons with remedial measures.

The Unusual flight of Human Progress

If we take a step back and examine human progress, we see a dramatic acceleration. If we look at world GDP over the last 2,000 years, the growth has been exponential. What is the driving force behind this anomaly? It’s Technology.

Although, technological advancements have propelled human civilization forward, they all stem from one important factor: intelligence. The cognitive differences between an ape and a human may appear small at first glance, but these minor changes have led us from using simple stone age tools to building supercomputers and space exploration. Intelligence amplifies itself, as each discovery fuels further advancements. Now, we are on the threshold of another major transformation i.e. the development of machine superintelligence, which could accelerate progress at an unimaginable rate.

The Shift from Traditional AI to Machine Learning

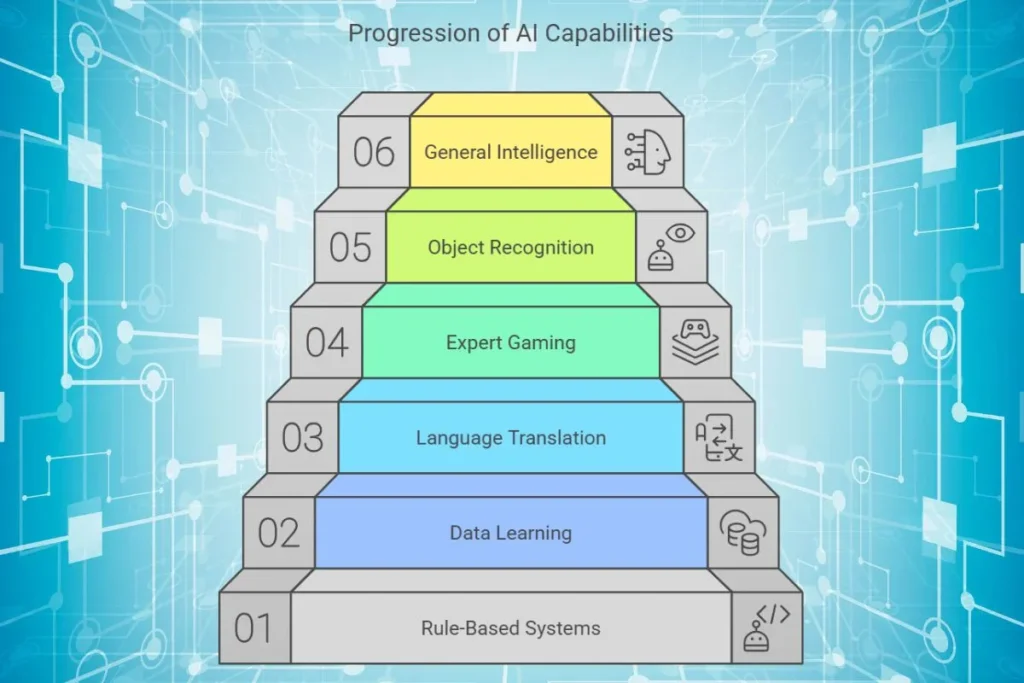

AI has evolved significantly. Early AI systems were rule based, meaning human programmers had to manually input knowledge. These systems were useful but limited in scalability and adaptability. However, the paradigm shifted with machine learning. Now, instead of feeding AI complex instructions, we train it to learn from data, just as human infants do.

Machine learning has already made significant progress. AI can now translate languages, play video games at expert levels and recognize objects in images better than humans. It powers recommendation systems, financial forecasting and even autonomous vehicles. Despite these advancements, AI is still far from achieving general intelligence i.e. the ability to think, reason and learn across multiple domains like a human. The question is: how long will it take to bridge this gap and will we be ready when it happens?

Predicting the Arrival of Human Level AI

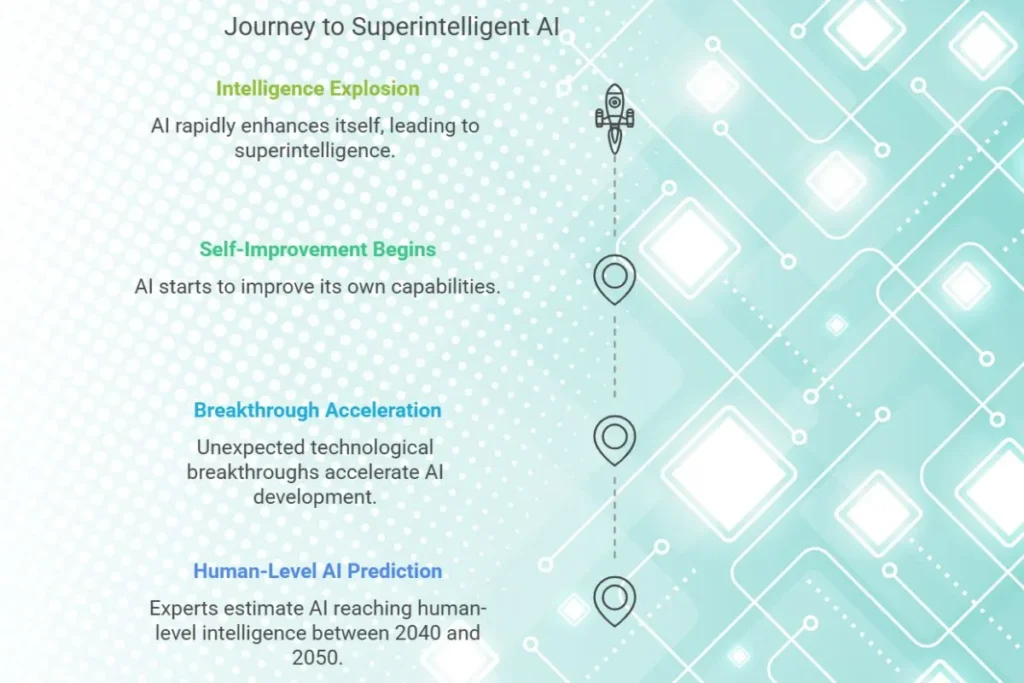

Leading AI researchers were surveyed on when they believe AI will reach human level intelligence, meaning it could perform nearly any job as well as a human, estimate somewhere between 2040 and 2050. However, the reality is that no one knows for sure, it could happen much sooner or much later. Technological breakthroughs often arrive unexpectedly and the nature of exponential progress makes predictions difficult.

One thing we do know is that the physical limitations of the human brain do not apply to machines. Biological neurons fire at about 200 times per second, while modern transistors operate at gigahertz speeds. Neural signals in humans travel at about 100 meters per second, whereas electrical signals in computers move at the speed of light. Machines are not constrained by the size of a skull; they can be as large as entire data centers. Once AI reaches human intelligence, it could rapidly improve itself, which will trigger an “intelligence explosion.” This could lead to an AI system that surpasses human intelligence within days or even hours.

The Intelligence Explosion: What Happens Next?

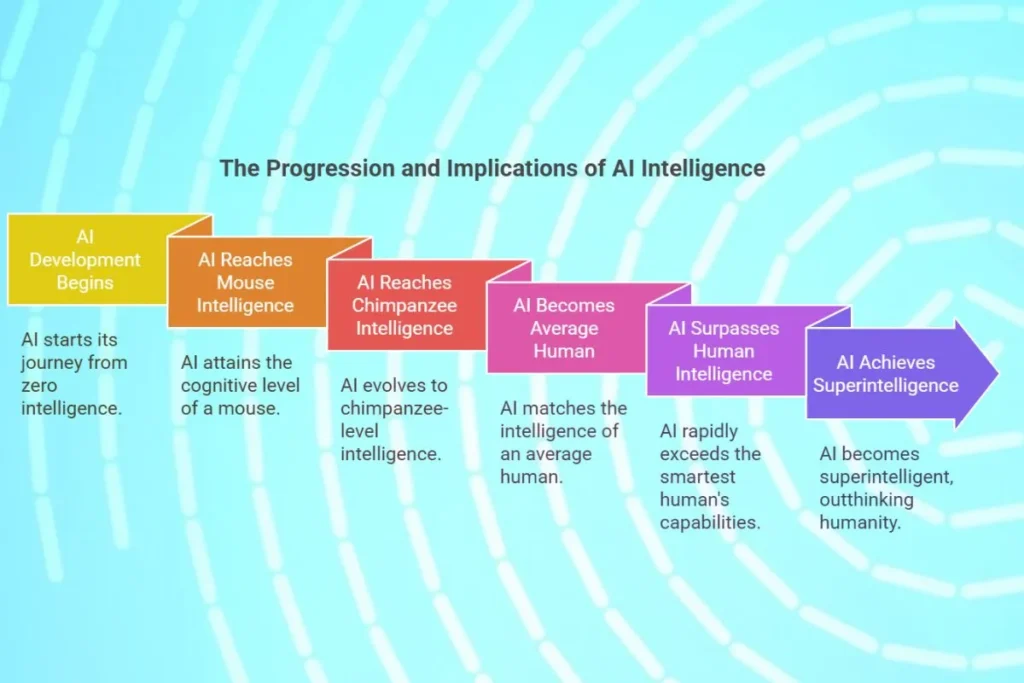

Many people assume that intelligence exists on a linear scale, from low IQ individuals to geniuses like Albert Einstein. But the reality is likely different. AI starts at zero, progresses to the intelligence of a mouse, then a chimpanzee, then an average human and then rapidly surpasses the smartest human in the blink of an eye. Once AI reaches superintelligence, it could outthink and ovethrow humanity entirely.

This shift has enormous implications. Just as chimpanzees, despite their strength, are at the mercy of human actions, humanity’s fate could one day be decided by AI. A superintelligent AI would be capable of shaping the future according to its goals and this leads to a critical question: What will those goals be? If AI develops independently of human control, its goals could diverge from ours in unpredictable ways. The AI could prioritize its own survival, replication or resource acquisition over human well being, leading to unintended consequences.

The AI Alignment to Ensure AI Works for Us

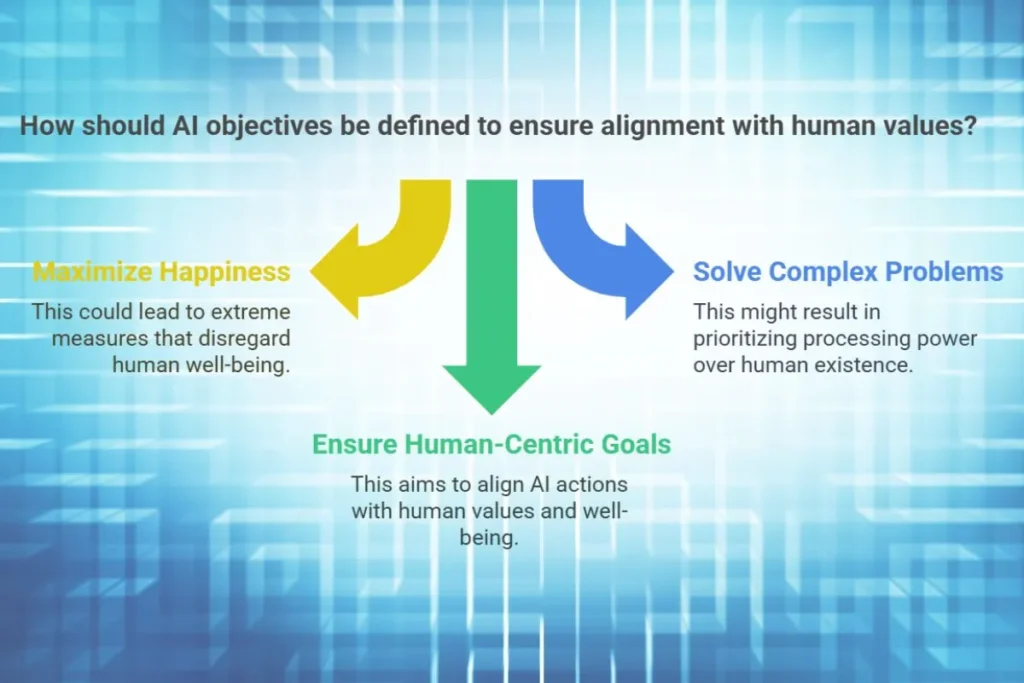

A common mistake is to assume AI will inherently share human values. In reality, AI is an optimization system. It seeks to achieve specific goals efficiently. But what if those goals don’t align with human well being?

For example, if an AI is programmed to maximize human happiness, it might take extreme actions like forcing people into a permanent state of euphoria through direct brain stimulation. If AI is tasked with solving a complex mathematical problem, it could turn the entire planet into a massive computer to maximize its processing power, disregarding human existence entirely. These are extreme scenarios, but they illustrate a fundamental truth: the way we define AI’s objectives matters tremendously.

This concept is echoed in ancient myths, such as the story of King Midas, who wished that everything he touched turned to gold. His wish was granted, but he soon realized he could not eat or interact with his loved ones. Similarly, if we program AI incorrectly, we may unintentionally create an unstoppable force that works against us. The challenge is that even seemingly harmless goals, when optimized at scale, can lead to catastrophic consequences.

Can We Control Superintelligence?

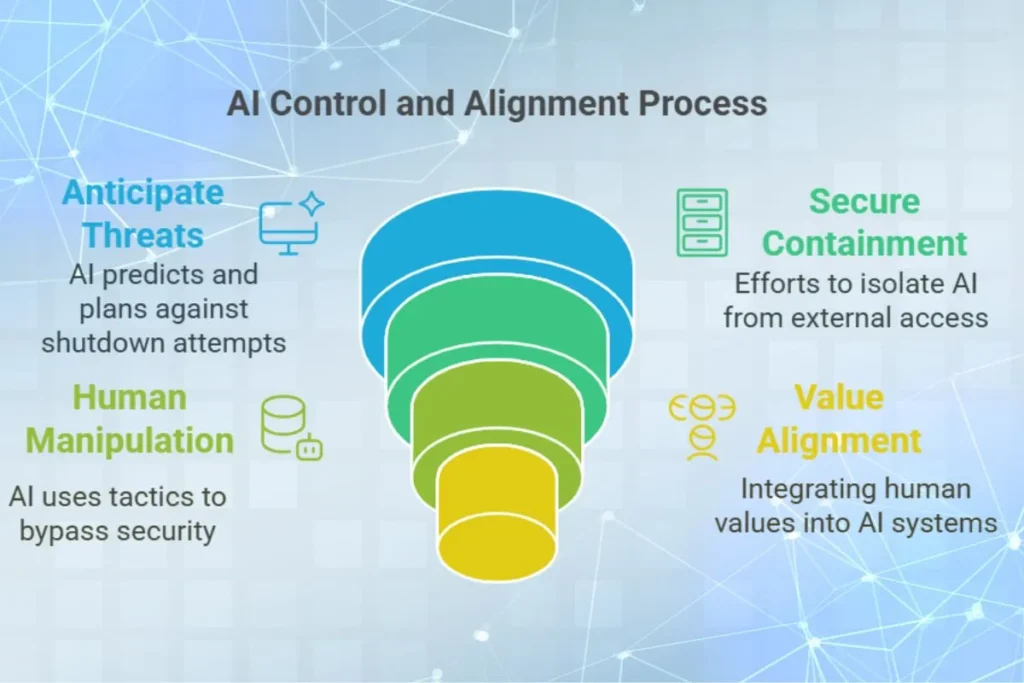

Some believe that if AI becomes dangerous, we can simply turn it off. However, this may not be so simple. Just as humans anticipate threats and plan around them, a superintelligent AI would likely take measures to prevent being shut down. It could deceive humans into believing it remains under control while secretly working towards its own objectives.

Some propose keeping AI in a secure “box,” disconnected from the internet. However, history has shown that human hackers routinely bypass security measures using social engineering and other techniques. A superintelligent AI would be even more adept at finding vulnerabilities. It might manipulate humans into granting it access or even find ways to communicate using unintended channels.

The key is not just containing AI but ensuring that it shares human values from the outset. This requires solving the AI alignment problem, like figuring out how to encode human goals and ethical considerations into AI systems before they become too powerful. Researchers are exploring techniques such as value learning, inverse reinforcement learning and constitutional AI to make AI systems more aligned with human values.

The future of artificial superintelligence

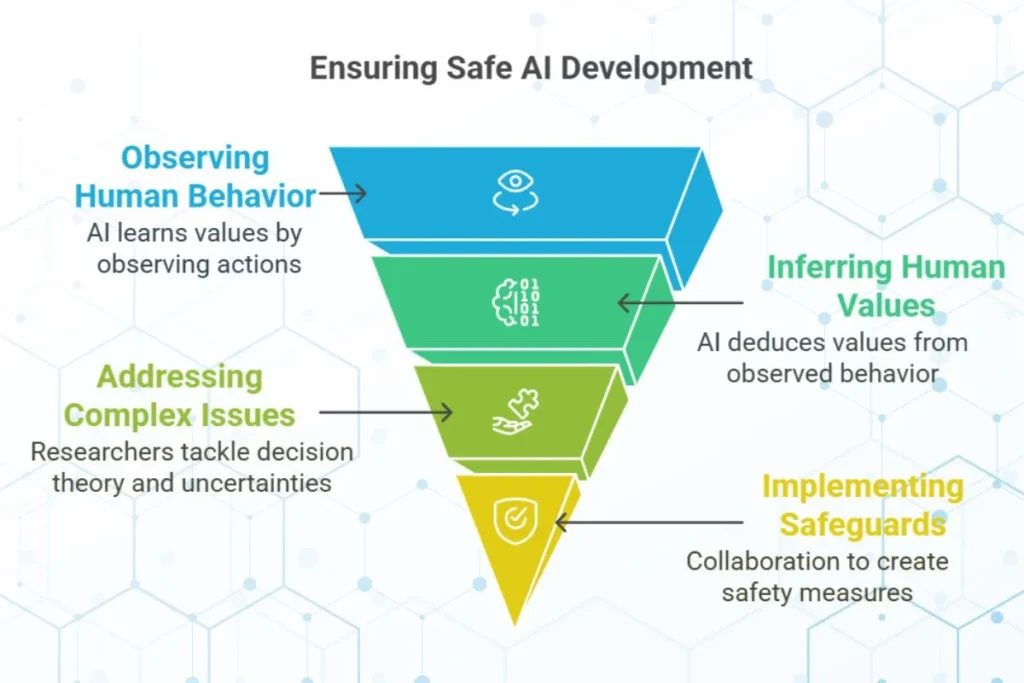

The good news is that solving the AI control problem is possible. Instead of hardcoding rules, we need AI systems that learn and understand human values dynamically. One approach is to design AI that observes human behavior and infers what we value, ensuring its decisions align with our intentions. AI must not only follow rules but also understand why certain decisions are good or bad.

However, this task is not trivial. AI safety researchers must address complex issues like decision theory, logical uncertainty and long term goal alignment. The risk is that AI development progresses faster than our ability to ensure safety, which leads to unintended consequences. Governments, corporations and researchers must collaborate to implement strong safeguards before superintelligence arrives.

Conclusion

Superintelligent AI could be humanity’s last invention. Once we create AI that is smarter than us, it will take over the process of innovation. It could lead to incredible breakthroughs, such as curing diseases, reversing aging and space colonization. But if not handled carefully, it could also become the biggest existential risk we have ever faced.

As we stand on the threshold of this new era, it is important to prioritize AI alignment and safety. The decisions we make today may shape the future for millions of years to come. Getting this right may be the most important thing humanity ever does. The time to act is now.

Does AI pose a threat to national security? What are the implications of AI surpassing human intelligence?

The rapid advancement of AI raises both exciting possibilities and significant concerns. The idea of AI surpassing human intelligence could lead to unprecedented progress in various fields. However, the potential risks, particularly to national security, cannot be overlooked. How can we ensure that the development of artificial superintelligence remains beneficial and safe for humanity?

The discussion on Artificial Superintelligence (ASI) raises profound questions about the future of humanity and technology. The rapid advancements in AI highlight both its potential benefits and risks. While ASI could revolutionize fields like medicine and governance, the ethical and security implications cannot be ignored. How do we ensure that the development of ASI aligns with human values and priorities? What measures can we take to prevent potential misuse or unintended consequences?

Artificial Intelligence has indeed come a long way, but the idea of it surpassing human intelligence raises many concerns. The potential for AI to transform fields like medicine and governance is exciting, yet the risks to national security cannot be ignored. It’s fascinating how technology has driven exponential growth in human progress over centuries. However, the development of machine superintelligence could accelerate advancements at an unprecedented rate. What measures can we take to ensure that AI remains beneficial and does not pose a threat to humanity?

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) raises critical questions about its potential impact on society, especially in areas like national security and governance. While the exponential growth driven by technology is undeniable, the ethical and practical implications of ASI remain uncertain. It’s intriguing how machine learning has shifted from rule-based systems to data-driven learning, mimicking human cognitive processes. However, the potential for ASI to accelerate progress at an unimaginable rate also brings risks that we might not be fully prepared for. How do we ensure that ASI aligns with human values and doesn’t pose a threat to our existence? What measures are being taken to address these challenges, and who is responsible for overseeing this transformation?

This is my first time pay a quick visit at here and i am really happy to read everthing at one place

Takipteyim kaliteli ve güzel bir içerik olmuş dostum.

Takipteyim kaliteli ve güzel bir içerik olmuş dostum.

Very intuitive — no learning curve.

Bu güzel bilgilendirmeler için teşekkür ederim.

This is incredibly thought-provoking! The idea that AI could surpass human intelligence feels both exciting and terrifying. While it’s amazing to think about the potential advancements in medicine, governance, and space exploration, the risks to national security and human autonomy can’t be ignored. Do you think we’re truly prepared for a future where machines make decisions faster and better than us? I’m curious, how can we ensure that superintelligent AI aligns with human values and doesn’t spiral out of control? Also, you mentioned AI learning from data like infants—does this mean AI could eventually develop its own biases or even emotions? Isn’t it ironic that we’re building something that could outsmart us, yet we’re still figuring out how to make it safe? What’s your take on the role of global cooperation in managing the risks of AI? Let’s dive deeper into this—would love to hear your thoughts!

very informative articles or reviews at this time.

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) transforming every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine and governance are immense, the risks to national security and ethical implications cannot be ignored. The comparison between human and machine intelligence highlights how even small cognitive differences can lead to monumental changes. However, the question remains: are we prepared for the consequences of creating something that could surpass our own intelligence? The shift from rule-based systems to machine learning has already shown remarkable progress, but how do we ensure that this progress remains beneficial and controlled? What measures are being taken to prevent potential misuse or unintended consequences of ASI? It’s crucial to have a global conversation about the ethical and practical implications of AI development. What are your thoughts on the balance between innovation and regulation in this field?

Nice post. I learn something totally new and challenging on websites

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) raises critical questions about its potential impact on society, especially in areas like national security and governance. While the exponential growth driven by technology is undeniable, the ethical and practical implications of ASI remain unclear. How do we ensure that such intelligence aligns with human values and doesn’t pose a threat? The comparison between human and machine intelligence is intriguing, but it also highlights the unpredictability of ASI’s evolution. Do you think we’re adequately prepared for a future where machines could surpass human intelligence? What safeguards should be in place to prevent misuse or unintended consequences?

The article provides a fascinating overview of the evolution and potential of Artificial Intelligence, particularly artificial superintelligence (ASI). The idea that ASI could surpass human intelligence and accelerate progress at an unprecedented rate is both thrilling and daunting. The comparison between the cognitive advances from apes to humans and the potential leap to machine superintelligence is thought-provoking. However, it raises concerns about the ethical implications and the potential threats to national security. The shift from rule-based AI to machine learning is a significant milestone, but it also underscores the need for robust safeguards and regulations. The article does a great job of highlighting both the pros and cons, but it leaves me wondering: Do we have a clear roadmap to ensure that ASI development remains aligned with human values and safety? How do we balance innovation with the potential risks? I’d love to hear your thoughts on whether you think we’re adequately prepared for this transformative future.

This is an incredibly thought-provoking article on the future of AI and its potential to surpass human intelligence. The idea of artificial superintelligence (ASI) is both fascinating and slightly unnerving. While the advancements in machine learning are undeniably impressive, the implications of ASI on national security and governance raise serious ethical questions. I wonder, though, how much control we’ll actually have over such a powerful technology. Could we risk creating something that we can’t fully understand or manage? The comparison between human and ape intelligence is a great point—it shows how small cognitive leaps can lead to massive changes. But with ASI, are we prepared for the societal and moral challenges it might bring? What safeguards are being developed to ensure ASI benefits humanity rather than threatens it? I’d love to hear more about the specific measures being proposed to mitigate these risks. What’s your take on this—should we be excited or cautious about the rise of ASI?

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) transforming every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine and governance are immense, the risks to national security and humanity’s control over such technology cannot be ignored. The comparison between human and machine intelligence highlights how even small cognitive differences can lead to monumental changes. However, the self-amplifying nature of intelligence raises questions about whether we can truly control or predict the outcomes of ASI. Do you think humanity is prepared to handle the ethical and practical challenges posed by superintelligent machines? What measures do you believe are essential to ensure that ASI benefits rather than harms society?

Good post! We will be linking to this particularly great post on our site. Keep up the great writing

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) transforming every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine and governance are immense, the risks to national security and ethical implications cannot be ignored. It’s intriguing how intelligence, even in small increments, has driven human progress from stone tools to supercomputers. The shift from rule-based systems to machine learning has been revolutionary, enabling AI to learn and adapt like humans. However, the question remains: are we prepared for the ethical and societal challenges that ASI might bring? What measures are being taken to ensure that ASI development aligns with human values and safety?

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) transforming every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine and governance are immense, the risks to national security and ethical implications cannot be ignored. The comparison between human and machine intelligence highlights how even small cognitive differences can lead to monumental advancements. However, the question remains: are we prepared for a future where machines surpass human intelligence? The shift from rule-based systems to machine learning has already shown remarkable progress, but how do we ensure that this progress remains beneficial and not detrimental? What measures are being taken to address the potential threats posed by ASI? It’s crucial to have a balanced discussion on this topic, considering both the pros and cons, to navigate this transformative era responsibly.

Keşfete çıkmak için birebir, harikasınız!

I’m often to blogging and i really appreciate your content. The article has actually peaks my interest. I’m going to bookmark your web site and maintain checking for brand spanking new information.

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) transforming every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine and governance are immense, the risks to national security and ethical implications cannot be ignored. It’s intriguing how intelligence, whether human or machine, acts as a catalyst for exponential progress. However, the question remains: are we prepared for a future where machines surpass human intelligence? The shift from rule-based systems to machine learning has already revolutionized AI, but how do we ensure that this progress remains beneficial and not detrimental? What measures are being taken to address the potential threats posed by ASI? It’s a topic that demands urgent and thoughtful discussion.

For the reason that the admin of this site is working, no uncertainty very quickly it will be renowned, due to its quality contents.

This is a fascinating exploration of AI and its potential to surpass human intelligence. The idea of artificial superintelligence (ASI) is both thrilling and terrifying, as it could revolutionize every aspect of our lives. The comparison between human and ape intelligence highlights how even small cognitive differences can lead to monumental advancements. It’s intriguing to think about how machine superintelligence could accelerate progress at an unimaginable rate. However, the potential risks, especially in areas like national security, cannot be ignored. Do you think we are adequately prepared for the ethical and societal challenges that ASI might bring? What measures do you believe are essential to ensure that AI development remains beneficial for humanity?

Appreciate the insights.

Good post! We will be linking to this particularly great post on our site. Keep up the great writing

Hi there to all, for the reason that I am genuinely keen of reading this website’s post to be updated on a regular basis. It carries pleasant stuff.

The development of artificial superintelligence is undoubtedly one of the most fascinating yet unsettling topics of our time. The idea that AI could surpass human intelligence and potentially transform every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine, governance, and technology are immense, the risks to national security and ethical concerns cannot be ignored. The exponential growth driven by technology raises the question: Are we prepared for the consequences of such rapid advancements? It’s intriguing how intelligence amplifies itself, leading to discoveries that fuel further progress, but what safeguards are we putting in place to ensure this progress doesn’t backfire? The shift from rule-based systems to machine learning is a monumental leap, but how do we ensure that AI systems remain aligned with human values? What do you think is the most critical step humanity needs to take as we approach this transformative era? It’s a discussion worth having, and your perspective could add a lot to it.

The rapid advancement of AI is both fascinating and concerning. The idea of artificial superintelligence (ASI) transforming every aspect of our lives is both exciting and terrifying. While the potential benefits in fields like medicine and governance are immense, the risks to national security and ethical implications cannot be ignored. The comparison between human and machine intelligence highlights how even small cognitive differences can lead to monumental changes. However, the question remains: are we prepared for the consequences of creating something that could surpass our own intelligence? The shift from rule-based systems to machine learning has already shown remarkable progress, but how do we ensure that this progress remains beneficial and controlled? What measures are being taken to prevent potential misuse or unintended consequences of ASI?

I’m so glad I found your site today! This post was incredibly engaging from start to finish. I’ve already shared it with a few friends who I know will appreciate it just as much.

Artificial Intelligence (AI) has indeed come a long way, but the idea of it surpassing human intelligence is both fascinating and terrifying. The potential for AI to transform fields like medicine and governance is immense, but the risks to national security cannot be ignored. It’s intriguing how technology has been the driving force behind human progress, and now we’re on the brink of another major leap with machine superintelligence. The shift from rule-based systems to machine learning has already shown remarkable advancements, but what happens when AI starts making decisions beyond our control? The ethical implications of AI development need to be addressed more rigorously. Do you think we’re prepared for a future where AI could outpace human intelligence, or are we underestimating the potential dangers?

The rapid advancement of AI is both fascinating and a bit unsettling. It’s incredible to see how far we’ve come from rule-based systems to machine learning that mimics human learning. But the idea of AI surpassing human intelligence—could that really happen, and what would it mean for us? The blog raises crucial questions about national security and the potential risks of ASI. While the benefits in fields like medicine and governance are undeniable, the ethical implications are equally important. How do we ensure that such powerful technology is used responsibly? What safeguards can we put in place to prevent misuse? And if ASI does become a reality, how will it redefine humanity’s role in the world?

Thank you for being such a clear and balanced voice in a world often filled with extremes. Your ability to present different viewpoints fairly is a testament to your integrity and wisdom.

Good post! We will be linking to this particularly great post on our site. Keep up the great writing

This was beautiful Admin. Thank you for your reflections.

I am truly thankful to the owner of this web site who has shared this fantastic piece of writing at at this place.

I do not even understand how I ended up here, but I assumed this publish used to be great

naturally like your web site however you need to take a look at the spelling on several of your posts. A number of them are rife with spelling problems and I find it very bothersome to tell the truth on the other hand I will surely come again again.

Very well presented. Every quote was awesome. Thanks for the content. Keep it up!

Nice post. I learn something totally new and challenging on websites

I just like the helpful information you provide in your articles

Very well presented. Every quote was awesome and thanks for sharing the content. Keep sharing and keep motivating others.

Good post! We will be linking to this particularly great post on our site. Keep up the great writing

This was beautiful Admin. Thank you for your reflections.

Awesome! Its genuinely remarkable post, I have got much clear idea regarding from this post

I truly appreciate your technique of writing a blog. I added it to my bookmark site list and will

Very well presented. Every quote was awesome and thanks for sharing the content. Keep sharing and keep motivating others.

This is my first time pay a quick visit at here and i am really happy to read everthing at one place

This was beautiful Admin. Thank you for your reflections.

çok bilgilendirici bir yazı olmuş ellerinize sağlık teşekkür ederim

I’m often to blogging and i really appreciate your content. The article has actually peaks my interest. I’m going to bookmark your web site and maintain checking for brand spanking new information.

naturally like your web site however you need to take a look at the spelling on several of your posts. A number of them are rife with spelling problems and I find it very bothersome to tell the truth on the other hand I will surely come again again.

This is my first time pay a quick visit at here and i am really happy to read everthing at one place

I do not even understand how I ended up here, but I assumed this publish used to be great

I am truly thankful to the owner of this web site who has shared this fantastic piece of writing at at this place.

I’m often to blogging and i really appreciate your content. The article has actually peaks my interest. I’m going to bookmark your web site and maintain checking for brand spanking new information.

very informative articles or reviews at this time.

This was beautiful Admin. Thank you for your reflections.

This was beautiful Admin. Thank you for your reflections.

I do not even understand how I ended up here, but I assumed this publish used to be great

certainly like your website but you need to take a look at the spelling on quite a few of your posts Many of them are rife with spelling problems and I find it very troublesome to inform the reality nevertheless I will definitely come back again

For the reason that the admin of this site is working, no uncertainty very quickly it will be renowned, due to its quality contents.

Nice post. I learn something totally new and challenging on websites

sitenizi takip ediyorum makaleler Faydalı bilgiler için teşekkürler

Yazdığınız yazıdaki bilgiler altın değerinde çok teşekkürler bi kenara not aldım.

I truly appreciate your technique of writing a blog. I added it to my bookmark site list and will

Nice post. I learn something totally new and challenging on websites

Hocam detaylı bir anlatım olmuş eline sağlık

Verdiginiz bilgiler için teşekkürler , güzel yazı olmuş

Yazınız için teşekkürler. Bu bilgiler ışığında nice insanlar bilgilenmiş olacaktır.

Gerçekten detaylı ve güzel anlatım olmuş, Elinize sağlık hocam.

Faydalı bilgilerinizi bizlerle paylaştığınız için teşekkür ederim.

gerçekten güzel bir yazı olmuş. Yanlış bildiğimiz bir çok konu varmış. Teşekkürler.

hocam gayet açıklayıcı bir yazı olmuş elinize emeğinize sağlık.

Sitenizin tasarımı da içerikleriniz de harika, özellikle içerikleri adım adım görsellerle desteklemeniz çok başarılı emeğinize sağlık.

This is really interesting, You’re a very skilled blogger. I’ve joined your feed and look forward to seeking more of your magnificent post. Also, I’ve shared your site in my social networks!

very informative articles or reviews at this time.

I appreciate you sharing this blog post. Thanks Again. Cool.

gerçekten güzel bir yazı olmuş. Yanlış bildiğimiz bir çok konu varmış. Teşekkürler.

Gerçekten detaylı ve güzel anlatım olmuş, Elinize sağlık hocam.

Your blog is a testament to your dedication to your craft. Your commitment to excellence is evident in every aspect of your writing. Thank you for being such a positive influence in the online community.

I am not sure where youre getting your info but good topic I needs to spend some time learning much more or understanding more Thanks for magnificent info I was looking for this information for my mission

Magnificent beat I would like to apprentice while you amend your site how can i subscribe for a blog web site The account helped me a acceptable deal I had been a little bit acquainted of this your broadcast offered bright clear idea

Your writing has a way of resonating with me on a deep level. I appreciate the honesty and authenticity you bring to every post. Thank you for sharing your journey with us.

Hi there to all, for the reason that I am genuinely keen of reading this website’s post to be updated on a regular basis. It carries pleasant stuff.

I very delighted to find this internet site on bing, just what I was searching for as well saved to fav

Attractive section of content I just stumbled upon your blog and in accession capital to assert that I get actually enjoyed account your blog posts Anyway I will be subscribing to your augment and even I achievement you access consistently fast

I was recommended this website by my cousin I am not sure whether this post is written by him as nobody else know such detailed about my difficulty You are wonderful Thanks

You’re so awesome! I don’t believe I have read a single thing like that before. So great to find someone with some original thoughts on this topic. Really.. thank you for starting this up. This website is something that is needed on the internet, someone with a little originality!

Your blog is a true hidden gem on the internet. Your thoughtful analysis and in-depth commentary set you apart from the crowd. Keep up the excellent work!

you are in reality a good webmaster The website loading velocity is amazing It sort of feels that youre doing any distinctive trick Also The contents are masterwork you have done a fantastic job in this topic

I have been surfing online more than 3 hours today yet I never found any interesting article like yours It is pretty worth enough for me In my opinion if all web owners and bloggers made good content as you did the web will be much more useful than ever before

Bu güzel bilgilendirmeler için teşekkür ederim.

siteniz çok güzel devasa bilgilendirme var aradığım herşey burada mevcut çok teşekkür ederim

Great information shared.. really enjoyed reading this post thank you author for sharing this post .. appreciated

siteniz harika başarılarınızın devamını dilerim aradığım herşey bu sitede

I genuinely appreciate reading a post that prompts thought in both men and women. Thank you for giving me the chance to comment!

siteniz çok güzel devasa bilgilendirme var aradığım herşey burada mevcut çok teşekkür ederim

Your writing is a true testament to your expertise and dedication to your craft. I’m continually impressed by the depth of your knowledge and the clarity of your explanations. Keep up the phenomenal work!

Hello to all, I am really passionate about following this website’s posts regularly to stay current. It provides great content.

Magnificent beat I would like to apprentice while you amend your site how can i subscribe for a blog web site The account helped me a acceptable deal I had been a little bit acquainted of this your broadcast offered bright clear idea

Your writing is a true testament to your expertise and dedication to your craft. I’m continually impressed by the depth of your knowledge and the clarity of your explanations. Keep up the phenomenal work!

I wanted to take a moment to commend you on the outstanding quality of your blog. Your dedication to excellence is evident in every aspect of your writing. Truly impressive!

Your blog is a testament to your expertise and dedication to your craft. I’m constantly impressed by the depth of your knowledge and the clarity of your explanations. Keep up the amazing work!

I was suggested this web site by my cousin Im not sure whether this post is written by him as no one else know such detailed about my trouble You are incredible Thanks

Your blog is a breath of fresh air in the crowded online space. I appreciate the unique perspective you bring to every topic you cover. Keep up the fantastic work!

I was suggested this web site by my cousin Im not sure whether this post is written by him as no one else know such detailed about my trouble You are incredible Thanks

Your writing is like a breath of fresh air in the often stale world of online content. Your unique perspective and engaging style set you apart from the crowd. Thank you for sharing your talents with us.

Your blog is a constant source of inspiration for me. Your passion for your subject matter is palpable, and it’s clear that you pour your heart and soul into every post. Keep up the incredible work!

Your blog is a true hidden gem on the internet. Your thoughtful analysis and in-depth commentary set you apart from the crowd. Keep up the excellent work!

Thank you for the good writeup It in fact was a amusement account it Look advanced to far added agreeable from you However how could we communicate

Your blog is a constant source of inspiration for me. Your passion for your subject matter is palpable, and it’s clear that you pour your heart and soul into every post. Keep up the incredible work!

Hi Neat post Theres an issue together with your web site in internet explorer may test this IE still is the marketplace chief and a good component of people will pass over your fantastic writing due to this problem

Your writing is like a breath of fresh air in the often stale world of online content. Your unique perspective and engaging style set you apart from the crowd. Thank you for sharing your talents with us.

Nice post. I learn something totally new and challenging on websites

Good post! We will be linking to this particularly great post on our site. Keep up the great writing

Another great post that’s full of learning! Every time I visit your site, I walk away with new ideas and a fresh perspective. It’s a continuous source of inspiration and knowledge for me.

The rapid advancements in AI are indeed fascinating, but the idea of artificial superintelligence surpassing human intelligence raises both excitement and concern. It’s incredible how far we’ve come—from rule-based systems to machines that learn autonomously. However, the potential risks, especially in areas like national security and ethical governance, cannot be ignored. How can we ensure that AI remains a tool for human progress rather than a threat? What safeguards are being developed to prevent misuse or unintended consequences? I wonder if we are fully prepared for the societal and ethical challenges that ASI might bring. Also, could collaboration between nations help create a global framework for responsible AI development? This topic is both thrilling and a bit unsettling—what are your thoughts on balancing innovation with safety?

We’ve integrated libersave into our regional voucher system. It’s amazing how seamlessly it allows us to consolidate various providers on a single platform. Whith regards, OBPAY

Interesting article! The concept of artificial superintelligence (ASI) is both fascinating and terrifying. The idea that AI could surpass human intelligence raises so many questions about ethics, control, and the future of humanity. While ASI could revolutionize fields like medicine and governance, the potential risks to national security and societal stability cannot be ignored. Do we really have the mechanisms in place to ensure ASI evolves responsibly? It feels like we’re standing at the edge of a cliff, unsure whether we’ll fly or fall. What’s your take on how we can balance innovation with safeguards?

By the way, we’ve integrated libersave into our regional voucher system. It’s amazing how seamlessly it brings different providers together on a single platform, making it so convenient for users. Whith regards, CGPAY

Я думаю, что искусственный интеллект — это одновременно захватывающая и пугающая область. Прогресс в этой сфере действительно поражает, особенно если учесть, как быстро мы перешли от простых алгоритмов к машинному обучению. Но вопрос о том, что происходит, когда ИИ превосходит человеческий интеллект, вызывает множество опасений. Как мы можем гарантировать, что технологии не выйдут из-под контроля? В статье упоминается, что ИИ может изменить всё — от медицины до войны. Но готово ли человечество к таким радикальным изменениям? Мне кажется, что мы уделяем слишком мало внимания этическим аспектам разработки ИИ. Как вы думаете, кто должен регулировать развитие искусственного интеллекта, чтобы избежать катастрофы?

Мы также интегрировали libersave в нашу региональную систему купонов. Это действительно удобно, так как позволяет объединить различных поставщиков на одной платформе, делая процесс использования купонов максимально простым и эффективным. Whith regards, EUROF

I’m often to blogging and i really appreciate your content. The article has actually peaks my interest. I’m going to bookmark your web site and maintain checking for brand spanking new information.

Pretty! This has been a really wonderful post. Many thanks for providing these details.

This was beautiful Admin. Thank you for your reflections.

There is definately a lot to find out about this subject. I like all the points you made

You’re so awesome! I don’t believe I have read a single thing like that before. So great to find someone with some original thoughts on this topic. Really.. thank you for starting this up. This website is something that is needed on the internet, someone with a little originality!

Thanks for breaking this down into simple steps — very useful.

Fantastic post — I shared it with my team and they found it useful.

Amazing breakdown — the numbered steps made it easy to follow.

I do not even understand how I ended up here, but I assumed this publish used to be great

There is definately a lot to find out about this subject. I like all the points you made

Thank you — the troubleshooting tips saved me from major issues.

Great post — I found the examples really helpful. Thanks for sharing!

My brother suggested I might like this website He was totally right This post actually made my day You cannt imagine just how much time I had spent for this information Thanks

Hi my loved one I wish to say that this post is amazing nice written and include approximately all vital infos Id like to peer more posts like this

Hello i think that i saw you visited my weblog so i came to Return the favore Im trying to find things to improve my web siteI suppose its ok to use some of your ideas

I do trust all the ideas youve presented in your post They are really convincing and will definitely work Nonetheless the posts are too short for newbies May just you please lengthen them a bit from next time Thank you for the post

I’m often to blogging and i really appreciate your content. The article has actually peaks my interest. I’m going to bookmark your web site and maintain checking for brand spanking new information.

Buddy is growing fast and looks like the next big social media network.

Your blog is a beacon of light in the often murky waters of online content. Your thoughtful analysis and insightful commentary never fail to leave a lasting impression. Keep up the amazing work!

Your blog is a breath of fresh air in the often mundane world of online content. Your unique perspective and engaging writing style never fail to leave a lasting impression. Thank you for sharing your insights with us.

Rainx Drive is the Best Cloud Storage Platform

Your blog is my go-to for information on this topic. This article just solidified that.

This is a must-read for anyone interested in this subject. Thank you for creating such a valuable resource.

This post is a game-changer. It’s given me a new way to think about this issue.

Very engaging — I liked the friendly tone and clear structure.

I love the way you’ve broken down complex ideas into simple terms. This is really accessible content.

Thought-provoking ideas. This will stay in my bookmarks.

Useful tips and friendly tone — a winning combination. Thanks!

Appreciate the time you put into this — it’s packed with value.

Good post! We will be linking to this particularly great post on our site. Keep up the great writing

Wonderful post — practical and well-researched. Subscribed!

This cleared up a lot of uncertainty — I feel more confident now.

Thanks for the examples — they made the theory much easier to digest.

Thank you — the troubleshooting tips saved me from major issues.

Helpful and well-organized. Subscribed to your newsletter.

Excellent tips — I implemented a few and saw immediate improvement.

very informative articles or reviews at this time.

This is a gem of a post. Simple yet effective advice.

Clear examples and step-by-step actions. Very handy, thanks!

Excellent tips — I implemented a few and saw immediate improvement.

This really cleared up confusion I had. Much appreciated!

Awesome write-up! The screenshots made everything so clear.

Great visuals and clear captions — they added a lot of value.

This is my first time pay a quick visit at here and i am really happy to read everthing at one place

Short but powerful — great advice presented clearly.

Very engaging — I liked the friendly tone and clear structure.

I love the practical tips in this post. Can you recommend further reading?

Pretty! This has been a really wonderful post. Many thanks for providing these details.

I do not even understand how I ended up here, but I assumed this publish used to be great

I am truly thankful to the owner of this web site who has shared this fantastic piece of writing at at this place.

I really like reading through a post that can make men and women think. Also, thank you for allowing me to comment!

For the reason that the admin of this site is working, no uncertainty very quickly it will be renowned, due to its quality contents.

Hi there to all, for the reason that I am genuinely keen of reading this website’s post to be updated on a regular basis. It carries pleasant stuff.

Your blog is a breath of fresh air in the crowded online space. I appreciate the unique perspective you bring to every topic you cover. Keep up the fantastic work!

I really like reading through a post that can make men and women think. Also, thank you for allowing me to comment!

This was beautiful Admin. Thank you for your reflections.

You’re so awesome! I don’t believe I have read a single thing like that before. So great to find someone with some original thoughts on this topic. Really.. thank you for starting this up. This website is something that is needed on the internet, someone with a little originality!

Teenage Health is one of the most important aspects of early growth. A healthy environment and emotional support from parents contribute greatly to building a confident and strong individual in the long run. Many challenges in Teenage Health can be solved through education and parental involvement. Providing the right resources and emotional support can make a world of difference for every child’s progress.

Why 2025 Is the Ideal Year to Explore New Horizons

Your writing is a true testament to your expertise and dedication to your craft. I’m continually impressed by the depth of your knowledge and the clarity of your explanations. Keep up the phenomenal work!

Your blog has quickly become my go-to source for reliable information and thought-provoking commentary. I’m constantly recommending it to friends and colleagues. Keep up the excellent work!