Artificial Neural Networks (ANNs) are a core part of modern machine learning and artificial intelligence. They enable machines to make predictions, recognize patterns and solve complex problems. Inspired by the structure of the human brain, artificial neural networks learn from data to make decisions in a way that loosely mimics human intelligence.

In this article, we discuss what an artificial neural network is and explain how artificial neural networks work. We also cover their definition, structure, types and practical applications in various industries. By the end, you should have a solid understanding of artificial neural networks and how they impact our lives.

How Artificial Neural Networks Work

The structure of an ANN is inspired by the biological neural network of the human brain, where neurons are interconnected to transmit and process signals. Similarly, artificial neurons (also called nodes or perceptrons) in a neural network process information and pass it on. Here is a breakdown of how they work.

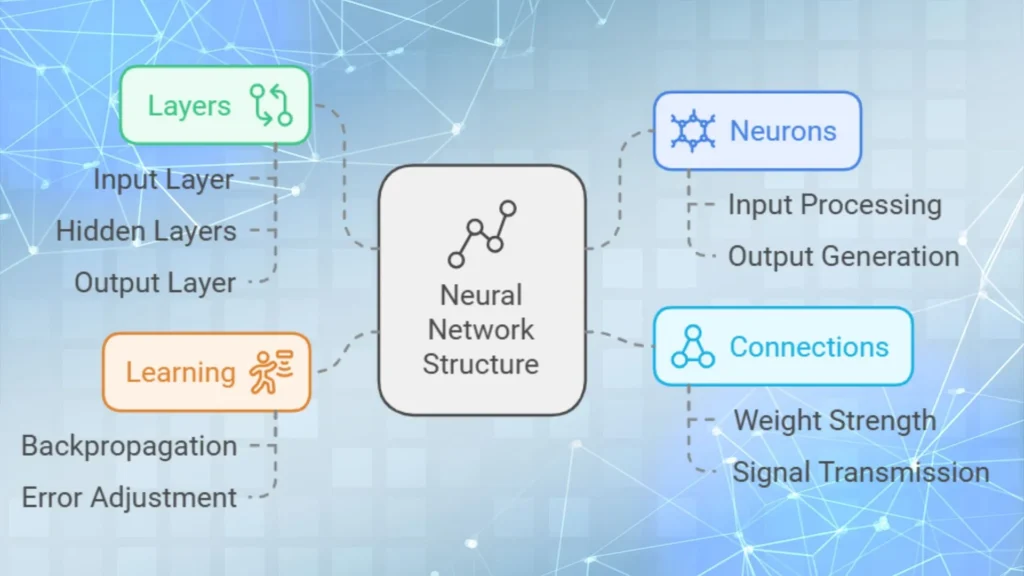

How Neural Networks Are Structured

1. Neurons: In the brain, neurons are cells that receive, process and transmit information. In ANNs, neurons are represented as nodes that process inputs and produce an output.

2. Layers: Like a network of neurons in the brain, an ANN consists of multiple layers of interconnected nodes. The primary types of layers are:

– Input Layer: The input layer receives data in the form of features (e.g., pixels in an image, words in a sentence). Each feature is assigned to a node.

– Hidden Layers: Between the input and output layers, neural networks have one or more hidden layers. These layers perform calculations on the input data to learn patterns. Each node in a hidden layer is connected to nodes in the previous and next layers, creating a web of interconnected nodes.

– Output Layer: The output layer provides the final prediction or classification based on the calculations in the hidden layers.

3. Connections: Just as neurons are connected by synapses, nodes in ANNs are linked by weighted connections. These weights determine the strength of the signal transmitted from one node to another.

4. Learning: Through a process called back-propagation, ANNs adjust their weights based on errors in the predictions, gradually learning to make more accurate predictions.
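
To make the structure above concrete, here is a minimal sketch of a single artificial neuron in plain Python. The function names and the input, weight and bias values are hypothetical, chosen only for illustration, and the sigmoid activation is just one common choice.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical values, not taken from any real model.
inputs = [0.5, -1.0, 2.0]    # one feature per input node
weights = [0.4, 0.3, -0.2]   # strength of each connection
bias = 0.1

output = neuron_output(inputs, weights, bias)
print(round(output, 3))
```

A layer is simply many such neurons reading the same inputs, each with its own weights and bias.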

How Information Flows Through a Neural Network

Neural networks use a process called forward propagation, where data flows from the input layer through the hidden layers to the output layer. Each node computes a weighted sum of its inputs, adds a bias, applies an activation function and passes the result to the nodes in the next layer. During training, the network adjusts these weights and biases to minimize error and improve accuracy, using error gradients computed by a process called back-propagation.
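
Forward propagation can be sketched in a few lines of plain Python. The network below (2 inputs, 2 hidden nodes, 1 output) and all of its weights and biases are hypothetical values picked so the arithmetic is easy to follow. A trained network would have learned its own.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j] holds the incoming weights of node j in this layer.
    """
    return [
        activation(sum(x * w for x, w in zip(inputs, node_weights)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters for illustration only.
hidden_weights = [[1.0, -1.0], [0.5, 0.5]]
hidden_biases = [0.0, -0.5]
output_weights = [[1.0, 1.0]]
output_biases = [0.0]


def forward(features):
    """Pass the features through the hidden layer, then the output layer."""
    hidden = dense_layer(features, hidden_weights, hidden_biases, relu)
    return dense_layer(hidden, output_weights, output_biases, sigmoid)


prediction = forward([2.0, 1.0])
print(prediction)
```

For the input `[2.0, 1.0]`, both hidden nodes output 1.0, so the output node applies the sigmoid to 2.0 and returns a value of roughly 0.88.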

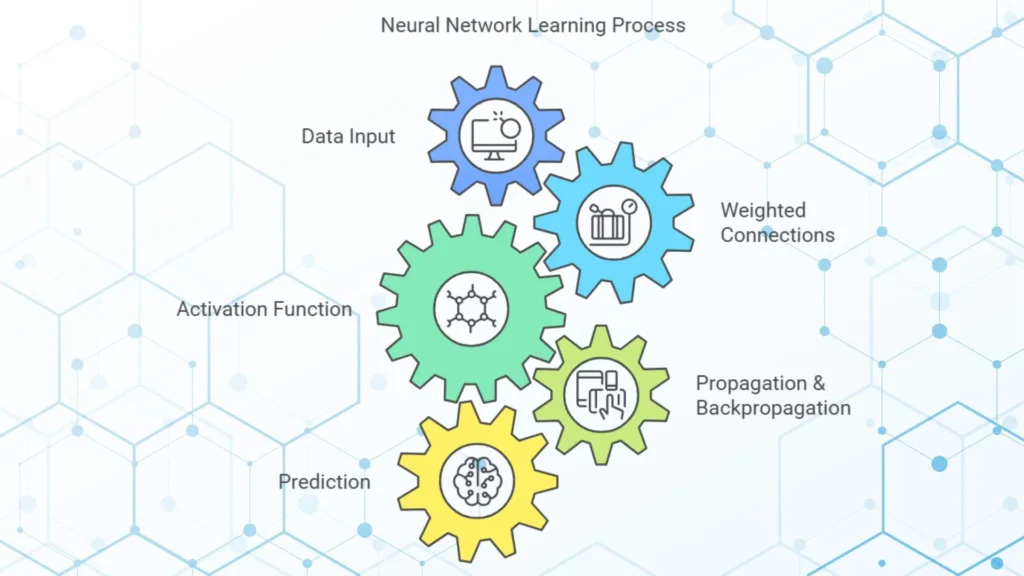

How Neural Networks Work

Let us understand the process of how a neural network learns and makes predictions:

1. Data Input: Data is fed into the input layer. For example, in image recognition, each pixel’s value in an image would be an input.

2. Weighted Connections: Each input is assigned a weight, representing the strength or importance of that input.

3. Activation Function: The weighted inputs to each node are summed and passed through an activation function. The activation function determines how strongly the neuron is activated and introduces the non-linearity that lets the network learn complex patterns.

4. Propagation and Back-propagation: The network calculates an output, compares it to the actual result and then adjusts the weights in the connections through back-propagation. This process continues until the network learns to produce accurate results.

5. Prediction: Once trained, the neural network can make predictions on new data.

Key Concepts in Neural Networks

– Activation Function: Determines whether a neuron should be activated based on the inputs. Common activation functions include ReLU, sigmoid and tanh.

– Weights and Biases: Weights determine the importance of each input, while biases adjust the output. Together, they help the network to learn and improve.

– Loss Function: Measures how far the predicted output is from the actual output, which helps the network to adjust weights during training.
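
As a rough illustration of these concepts, the sketch below implements the three activation functions named above and one possible loss function, mean squared error. The choice of mean squared error and the sample numbers are assumptions made for the example, and other losses (such as cross-entropy) are equally common.

```python
import math


def relu(z):
    """Pass positive values through unchanged, clamp negatives to zero."""
    return max(0.0, z)


def sigmoid(z):
    """Map any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Map any real number into the range (-1, 1)."""
    return math.tanh(z)


def mean_squared_error(predicted, actual):
    """Average squared difference between predictions and true values."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), round(sigmoid(z), 3), round(tanh(z), 3))

# Hypothetical predictions and targets.
loss = mean_squared_error([0.9, 0.2, 0.4], [1.0, 0.0, 0.0])
print(round(loss, 3))
```

The lower the loss, the closer the predictions are to the true values. Training aims to push this number down.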

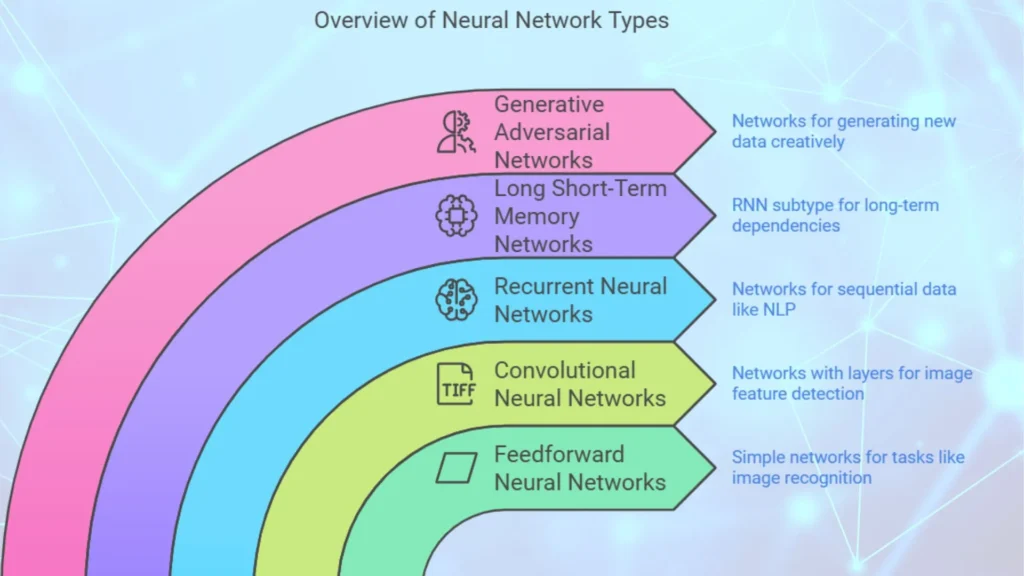

Types of Neural Networks

There are various types of neural networks, each suited to different tasks. Let us look at the most common types.

1. Feedforward Neural Networks (FNNs)

Feedforward Neural Networks (FNNs) are the simplest type of neural network, where information flows in one direction, from input to output. Feedforward networks are often used for tasks like image and speech recognition.

2. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are primarily used for image recognition and computer vision tasks. They have specialized layers called convolutional layers, which detect features like edges, textures and patterns in images. CNNs are commonly used in applications like facial recognition and autonomous driving.

Example: Google Photos uses CNNs to classify and tag images based on their content.

For instance, a CNN can detect whether an image contains a dog, cat, car, etc.
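
The core operation of a convolutional layer can be sketched in plain Python as follows. The tiny image and the hand-written vertical-edge kernel are hypothetical, since in a real CNN the kernel values are learned during training. As in most deep learning libraries, the operation shown is technically a cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return the feature map."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is zero wherever the image is uniform and positive only in the middle column, where the dark-to-bright edge sits.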

3. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed to handle sequential data, making them ideal for time series analysis and natural language processing (NLP). Unlike feedforward networks, RNNs have connections that loop back, which enables them to ‘remember’ previous inputs.

Example: RNNs power predictive text in smartphones and real-time language translation. They are also commonly used in other natural language processing tasks, such as sentiment analysis and speech recognition.
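
The looping connection can be illustrated with a minimal scalar recurrent unit. This is a simplified sketch, not a production RNN: the weights `w_x` and `w_h` and the input sequence are hypothetical, and real RNNs use vectors and matrices rather than single numbers.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: the new state depends on the current input and the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit one element at a time and collect the states."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# A single non-zero input followed by silence.
states = run_rnn([1.0, 0.0, 0.0])
print([round(h, 3) for h in states])
```

Even though the second and third inputs are zero, the hidden state stays positive, because each step feeds the previous state back in. That fading trace is the unit's memory of the first input.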

4. Long Short-Term Memory Networks (LSTMs)

Long Short-Term Memory Networks (LSTMs) are a subtype of RNNs that are particularly good at capturing long-term dependencies in sequential data. LSTMs are used for tasks like speech recognition and text generation, where context over a longer sequence is important.
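
How an LSTM holds on to information can be sketched with a scalar version of the standard LSTM gate equations. Everything numeric here is a hypothetical choice for illustration: all parameters are zero except one candidate weight and a large forget-gate bias, which keeps the forget gate close to 1.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell."""
    forget = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])  # how much old memory to keep
    write = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])   # how much new information to store
    expose = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])  # how much memory to output
    candidate = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])
    c = forget * c_prev + write * candidate  # updated cell state (long-term memory)
    h = expose * math.tanh(c)                # updated hidden state (output)
    return h, c


# Hypothetical parameters, not learned from data.
params = {name: 0.0 for name in (
    "w_f", "u_f", "b_f", "w_i", "u_i", "b_i",
    "w_o", "u_o", "b_o", "w_g", "u_g", "b_g",
)}
params["w_g"] = 1.0  # let the input reach the candidate value
params["b_f"] = 5.0  # forget gate close to 1, so memory is retained

h, c = 0.0, 0.0
cell_states = []
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    cell_states.append(c)

print([round(value, 3) for value in cell_states])
```

With the forget gate near 1, the cell state loses less than 1% of its value per step after the first input, which is the mechanism that lets LSTMs carry context across long sequences.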

5. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, that are trained against each other: the generator creates new data, while the discriminator tries to tell generated data apart from real data. They are popular in creative fields, used to create realistic images, music and even video game characters.

Example: GANs are used to generate realistic-looking faces in applications such as deepfakes.

How Neural Networks Learn

Neural networks ‘learn’ through a process called training, where they analyze large amounts of labeled data and adjust their internal parameters to improve accuracy. Here is how the learning process works:

1. Forward Propagation: The input data moves through the layers and the network makes a prediction.

2. Loss Calculation: The prediction is compared to the actual output using a loss function, which measures the difference.

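3. Backpropagation: The network works backwards from the output layer to calculate how much each weight and bias contributed to the loss, ‘learning’ from its mistakes.

4. Optimization: An algorithm like gradient descent uses these contributions to update the weights and biases and reduce the loss, which helps the network to improve.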

This cycle repeats until the network reaches an acceptable level of accuracy. In general, a larger and more representative dataset helps the network generalize and make accurate predictions, while a more complex network can capture more intricate patterns but is also more prone to overfitting.
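
The four steps above can be sketched as a training loop for the simplest possible case: a single sigmoid neuron learning the logical OR function. The dataset, learning rate and number of epochs are assumptions made for the example. With only one neuron, backpropagation reduces to a single chain-rule step. For a sigmoid output with cross-entropy loss, the gradient with respect to the weighted sum is simply `prediction - target`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy labeled dataset: inputs and targets for the logical OR function.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def predict(x, weights, bias):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)


def mean_loss(weights, bias):
    """Binary cross-entropy averaged over the dataset."""
    total = 0.0
    for x, y in data:
        p = predict(x, weights, bias)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)


def train(epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            p = predict(x, weights, bias)  # forward propagation
            error = p - y                  # gradient of the loss w.r.t. the weighted sum
            for j, xj in enumerate(x):     # chain rule: gradient for each weight
                grad_w[j] += error * xj / len(data)
            grad_b += error / len(data)
        # Gradient descent: move each parameter against its gradient.
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


initial_loss = mean_loss([0.0, 0.0], 0.0)
weights, bias = train()
final_loss = mean_loss(weights, bias)
predictions = [round(predict(x, weights, bias)) for x, _ in data]

print(round(initial_loss, 3), round(final_loss, 3))
print(predictions)
```

Multi-layer networks follow the same loop. The difference is that backpropagation applies the chain rule repeatedly to pass the error from the output layer back through each hidden layer.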

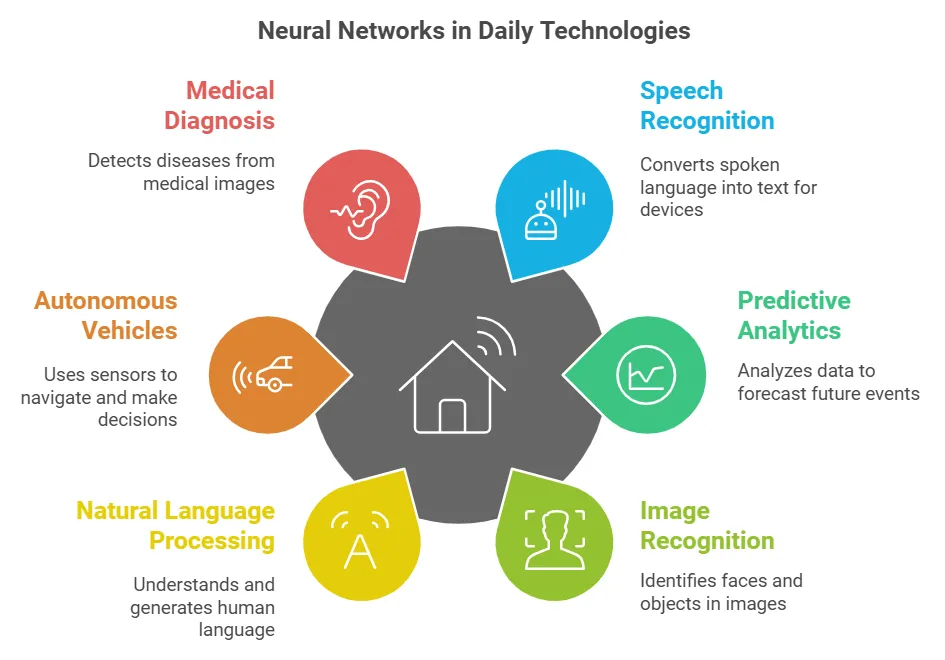

Real World Applications of Neural Networks

Neural networks power many of the technologies we use daily. Here are some notable applications:

1. Speech Recognition

Neural networks have revolutionized speech recognition systems, enabling assistants like Google Assistant, Alexa and Siri to understand spoken language. These systems convert audio waves into text, analyze it and provide relevant responses.

Case Study: Google Assistant uses a combination of RNNs and CNNs to transcribe speech and recognize commands with high accuracy.

2. Predictive Analytics

Neural networks are widely used for predictive analytics in finance, healthcare and retail. They analyze historical data to make predictions about future events, such as stock prices, patient outcomes, or sales trends.

Case Study: Banks use neural networks to predict loan defaults and identify fraudulent transactions, improving security and risk management.

3. Image and Facial Recognition

Tasks like unlocking phones with facial recognition or tagging friends in social media photos use neural networks for image analysis. CNNs, in particular, are used to identify faces, objects and patterns in images.

Example: Facebook uses neural networks to suggest tags for people in uploaded photos, to make photo sharing more seamless.

4. Natural Language Processing (NLP)

NLP applications like sentiment analysis, translation and chatbot development rely on neural networks. They help machines to understand and generate human language, which makes it possible to analyze social media sentiment or translate languages instantly.

Case Study: Google Translate uses deep neural networks to translate text between languages by understanding context and sentence structure.

5. Autonomous Vehicles using Computer Vision

Self-driving cars are one of the most exciting applications of neural networks. They use sensors, cameras and deep learning models to interpret surroundings, identify objects and make decisions in real time.

Example: Tesla’s Autopilot uses CNNs to process video footage from cameras and make decisions about steering, braking and lane changes.

6. Medical Diagnosis

In healthcare, neural networks assist in diagnosing diseases by analyzing medical images, lab test results and patient data. They are especially useful in detecting diseases early by recognizing subtle patterns that may go unnoticed by humans.

Example: Neural networks are used to detect signs of cancer in X-rays or MRI scans, which can support faster and more accurate diagnoses.

Challenges and Limitations of Neural Networks

Although neural networks are powerful, they have their limitations:

– Data Requirements: Neural networks require large datasets to learn effectively. This can be a challenge in areas where data is scarce or privacy concerns limit data collection.

– Computational Power: Training large neural networks requires substantial computational resources, often including specialized hardware like GPUs.

– Interpretability: Neural networks often operate as ‘black boxes’, so it is difficult to understand how they arrive at certain decisions. This can be a concern in high-stakes applications like healthcare.

– Risk of Overfitting: Neural networks can sometimes memorize data instead of generalizing from it. This leads to overfitting, where the model performs well on training data but poorly on new data.

– Ethical Concerns: Applications like facial recognition and deepfakes raise ethical concerns regarding privacy and misuse of technology.

Conclusion

Artificial neural networks are at the center of modern machine learning and AI. By loosely imitating the structure and function of the human brain, they can process large amounts of data and make complex predictions. From speech recognition to self-driving cars, neural networks are shaping the future of technology.

As we continue to develop more advanced neural networks, their impact will keep growing. If you are interested in AI or machine learning, understanding these fundamentals is a good starting point for exploring how these powerful tools can be applied to solve real-world problems.