Self-driving technology is transforming the way we think about transportation, promising safer and more efficient travel. A major contributor to autonomous vehicles (AVs) is computer vision, a field of artificial intelligence (AI) that enables machines to interpret and respond to visual data.

In this post, we shall learn how self-driving technology and computer vision work. We shall break down how computer vision helps self-driving cars navigate complex environments, detect obstacles and make real-time decisions.

We shall also explore key components like object detection, lane detection and obstacle avoidance, along with case studies of leading companies in the field, such as Tesla and Waymo. Finally, we shall discuss the challenges, ethical implications and future of self-driving technology.

What Is Computer Vision in Self-Driving Technology?

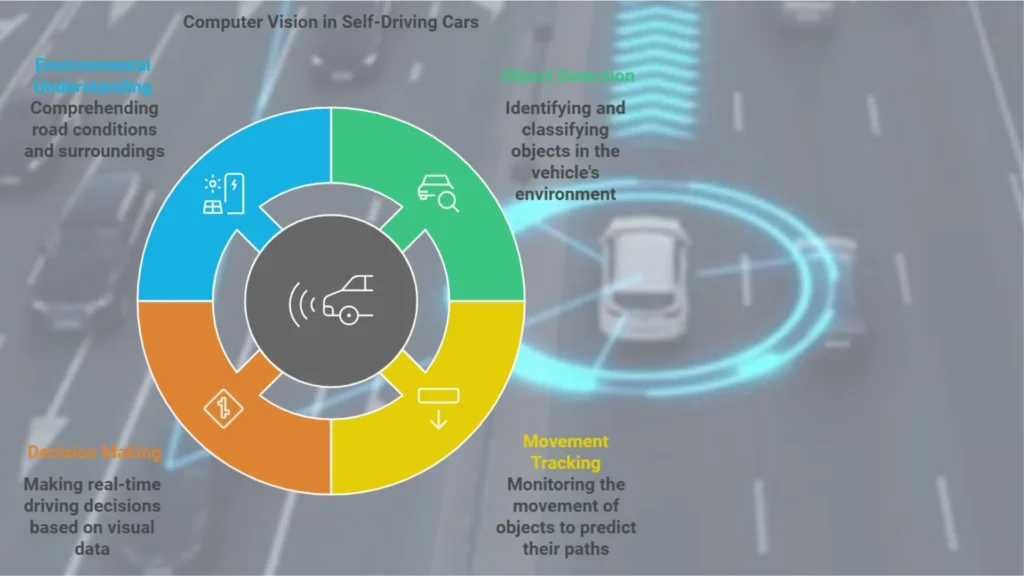

Computer vision allows self-driving cars to “see” and interpret their surroundings. Using a combination of cameras, sensors and algorithms, AVs can analyze visual data, detect objects, track movement and make split-second decisions. This capability is essential for autonomous driving, because a vehicle must understand its environment before it can respond to it.

Computer vision in self-driving cars relies on advanced algorithms, including deep learning models loosely inspired by the way the human visual system processes images. These models process image data captured by cameras around the car, helping it interpret the road, detect other vehicles, pedestrians, signs and obstacles, and respond appropriately.

Key Components of Computer Vision in Self-Driving Cars

Computer vision in AVs consists of several important components. Each plays a role in ensuring the car can navigate safely and respond accurately to its environment.

1. Object Detection

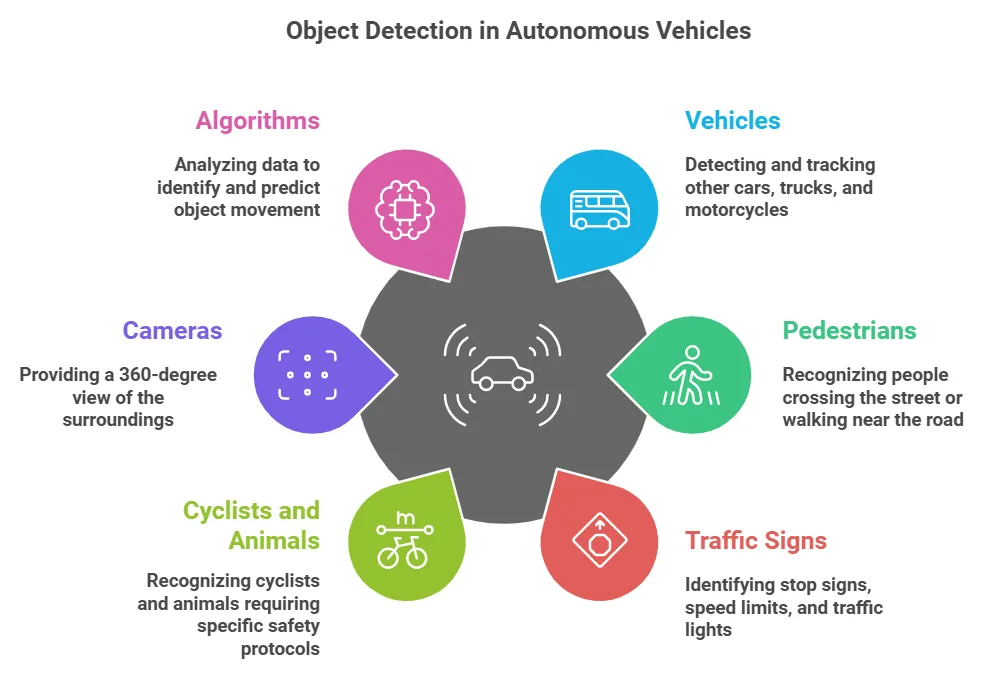

Object detection enables AVs to identify various objects on the road, such as other cars, pedestrians, bicycles, animals and obstacles. By recognizing and classifying these objects, self-driving cars can avoid collisions and adjust their speed or direction accordingly.

Detected objects are typically classified into categories such as:

– Vehicles: Detecting and tracking other cars, trucks and motorcycles.

– Pedestrians: Recognizing people crossing the street or walking near the road.

– Traffic Signs and Signals: Identifying stop signs, speed limits and traffic lights.

– Cyclists and Animals: Recognizing cyclists and animals, which require specific safety protocols.

Self-driving cars typically use multiple cameras to create a 360-degree view of their surroundings. Algorithms analyze this data to identify each object in the car’s vicinity and estimate properties such as its size, speed and direction of movement. For instance, when a pedestrian crosses the street, the vehicle’s object detection system identifies the person and predicts their path so the car can slow down or stop if necessary.
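Object detectors usually output several overlapping candidate boxes for the same object, so a common post-processing step is non-maximum suppression (NMS), which keeps only the highest-scoring box in each overlapping cluster. The minimal, library-free sketch below assumes boxes are given as (x1, y1, x2, y2) pixel corners with one confidence score each; the function names and the 0.5 overlap threshold are illustrative choices, not taken from any particular AV stack.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical raw detections: two overlapping boxes on one pedestrian, one on a car.
boxes = [(100, 50, 140, 150), (102, 52, 142, 148), (300, 80, 420, 160)]
scores = [0.92, 0.85, 0.78]
print(non_max_suppression(boxes, scores))  # prints [0, 2]
```

The duplicate pedestrian box is dropped because its overlap with the stronger detection is well above the threshold, while the car box survives because it does not overlap at all.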

2. Lane Detection

Lane detection helps self-driving cars stay within their lanes by identifying lane markings on the road. This is important for lane-keeping assistance and safe driving, especially on highways and multi-lane roads. Lane detection algorithms must locate lane lines reliably, even in difficult situations where markings are faint or covered by dirt.

This process involves:

– Edge Detection: Detecting the edges of the road and lane markings.

– Perspective Transformation: Converting the camera’s view to a bird’s-eye view, in which lane lines appear roughly parallel and are easier to identify.

– Line Fitting: Fitting lines or curves to the detected lane pixels; the resulting lane model is then used to guide the car’s trajectory.
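To make the line-fitting step concrete, here is a minimal least-squares sketch. It assumes the earlier steps have already produced (x, y) pixel coordinates of one lane marking in the bird’s-eye view, and it fits a straight line; many real pipelines fit second-order polynomials instead so they can follow curves. The function name is hypothetical.

```python
from typing import List, Tuple


def fit_lane_line(points: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of x = m * y + c to (x, y) lane-pixel coordinates.

    x is modeled as a function of y because lane lines are close to vertical
    in a bird's-eye view, where fitting y against x would be ill-conditioned.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    s_yy = sum((y - mean_y) ** 2 for _, y in points)
    if s_yy == 0:
        raise ValueError("points must span more than one image row")
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    m = s_xy / s_yy
    return m, mean_x - m * mean_y


# Hypothetical lane pixels from a slightly slanted marking: x = 0.1 * y + 200.
pixels = [(200 + 0.1 * y, y) for y in range(0, 400, 50)]
m, c = fit_lane_line(pixels)
print(round(m, 3), round(c, 1))  # prints 0.1 200.0
```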

Self-driving cars use a combination of cameras and sensors to detect lane lines and measure the car’s position within them. Combined with object detection, lane detection also helps AVs navigate lane changes, merges and turns safely: the car tracks both the lane boundaries and nearby vehicles, and adjusts its position as needed.
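Once the left and right lane lines have been located (for example, by evaluating the fitted lines at the bottom row of the image, closest to the car), the car’s position within the lane can be estimated by comparing the lane center with the car’s center. This sketch assumes a bird’s-eye image in which the camera sits on the car’s centerline, and a hypothetical scale of 3.7 m of lane width per 640 pixels; both are illustrative assumptions.

```python
def lateral_offset_m(left_x_px: float, right_x_px: float,
                     image_width_px: int, meters_per_px: float) -> float:
    """Signed offset of the car from the lane center, in meters.

    Assumes the camera sits on the car's centerline, so the car's position is
    the horizontal center of the bird's-eye image (x grows to the right).
    Positive results mean the car is to the right of the lane center.
    """
    lane_center_px = (left_x_px + right_x_px) / 2.0
    car_center_px = image_width_px / 2.0
    return (car_center_px - lane_center_px) * meters_per_px


# Hypothetical scale: a 3.7 m lane spans 640 px in the warped image.
offset = lateral_offset_m(300.0, 940.0, 1280, 3.7 / 640)
print(f"{offset:.3f} m")  # prints 0.116 m
```

A lane-keeping controller could then steer to drive this offset toward zero.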

3. Obstacle Avoidance

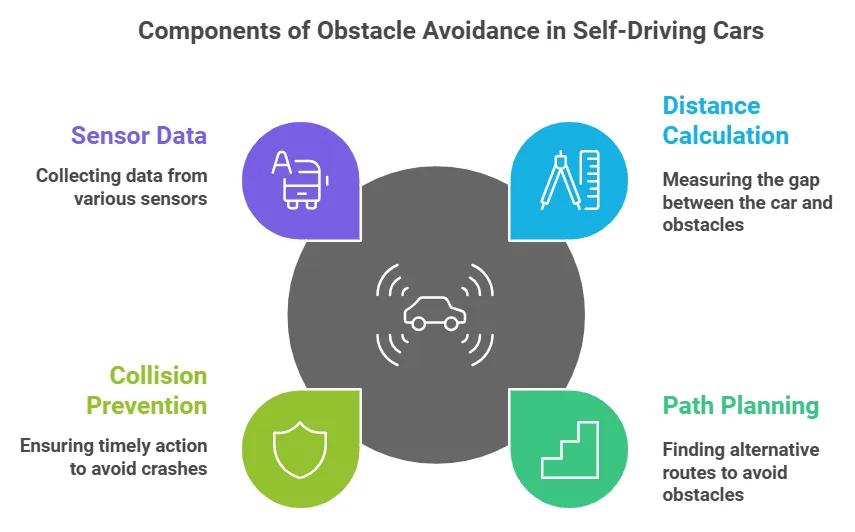

Obstacle avoidance is essential to prevent accidents. It enables self driving cars to detect and react to unexpected obstacles in their path, such as fallen objects, sudden stops by other vehicles, or erratic movements of cyclists and pedestrians.

Key aspects of obstacle avoidance include:

– Distance Calculation: Measuring the distance between the car and the obstacle using sensor data.

– Path Planning: Determining an alternative route to avoid the obstacle.

– Collision Prevention: Using predictive algorithms to ensure there’s enough time to slow down or stop.
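The distance and collision-prevention checks can be illustrated with two standard quantities: time to collision (distance divided by closing speed) and stopping distance (distance covered during the reaction delay plus the braking distance v²/(2a)). The sketch assumes a stationary obstacle, constant speed, a 0.5 s system reaction delay and 6 m/s² of braking deceleration; these numbers and function names are illustrative, not values from any real vehicle.

```python
def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact if speeds stay constant; infinite if not closing in."""
    if closing_speed_mps <= 0:
        return float("inf")
    return distance_m / closing_speed_mps


def stopping_distance(speed_mps: float, reaction_s: float = 0.5,
                      decel_mps2: float = 6.0) -> float:
    """Distance traveled during the reaction delay plus braking to a standstill."""
    # Assumed defaults: 0.5 s reaction delay, 6 m/s^2 constant deceleration.
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def should_brake_now(distance_m: float, speed_mps: float,
                     margin_m: float = 2.0) -> bool:
    """Brake once a stationary obstacle is within stopping distance plus a margin."""
    return distance_m <= stopping_distance(speed_mps) + margin_m


# Car at 25 m/s (90 km/h) approaching a stationary object.
print(time_to_collision(60.0, 25.0))  # prints 2.4
print(should_brake_now(60.0, 25.0))   # prints True: stopping needs about 64.6 m plus margin
print(should_brake_now(100.0, 25.0))  # prints False: still enough room
```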

Obstacle detection often combines data from computer vision with other sensors, such as lidar and radar, to assess the distance and speed of objects around the car. When an obstacle is detected, the car’s system calculates the safest course of action, such as slowing down, changing lanes or coming to a stop.
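One simple way to combine distance estimates from different sensors is inverse-variance weighting, which trusts each sensor in proportion to its precision; for two independent measurements it gives the same result as a one-dimensional Kalman filter update. The sketch below is illustrative: the camera and radar readings and their variances are made-up numbers, and real AV stacks use multi-dimensional filters over position, velocity and more.

```python
from typing import Tuple


def fuse_estimates(z1: float, var1: float,
                   z2: float, var2: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent measurements.

    Returns the fused estimate and its (smaller) variance.
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("variances must be positive")
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    return fused, 1.0 / (w1 + w2)


# Hypothetical readings: camera says 42.0 m (std 2.0 m), radar says 40.0 m (std 0.5 m).
distance, variance = fuse_estimates(42.0, 2.0 ** 2, 40.0, 0.5 ** 2)
print(round(distance, 2), round(variance, 3))  # prints 40.12 0.235
```

The fused distance lands close to the more precise radar reading, and its variance is lower than either sensor’s alone.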

How Self-Driving Cars Interpret and Respond to Their Surroundings

To understand and respond to complex environments, self-driving cars rely on a combination of data sources:

1. Cameras: The primary source of visual data, capturing images that allow the car to detect objects, lanes and signs.

2. Lidar (Light Detection and Ranging): Lidar sensors emit laser pulses and measure their reflections to build 3D maps of the car’s surroundings, providing precise distance measurements.

3. Radar: Radar sensors measure objects’ distance and relative speed, helping the car track nearby vehicles even in poor weather conditions.

4. Ultrasonic Sensors: Typically used for close-range detection, such as parking assistance.
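Many lidar units measure distance by timing how long a laser pulse takes to return (time of flight), and each return is then converted from the sensor’s angles into 3D coordinates to build the point cloud. This sketch assumes a coordinate convention of x forward, y left and z up; real sensors document their own conventions and calibration, and the function names here are hypothetical.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_MPS = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_rad: float,
                 elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one lidar return from spherical to sensor-frame (x, y, z).

    Assumed convention: x forward, y left, z up; azimuth measured from x
    toward y, elevation measured up from the x-y plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


print(round(range_from_time_of_flight(200e-9), 3))  # prints 29.979
# A return 30 m away, 10 degrees to the left and 2 degrees below the sensor.
print(to_cartesian(30.0, math.radians(10.0), math.radians(-2.0)))
```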

Many self-driving cars fuse data from the sensors above to build a 3D model of their surroundings. This sensor fusion allows the vehicle to detect, classify and track objects, identify lane markings and monitor other road features. The process generally works as follows:

1. Data Collection: The sensors collect visual and spatial data from the environment.

2. Object and Lane Detection: The computer vision system detects and classifies objects and lanes based on the data.

3. Path Planning: The vehicle calculates the safest and most efficient path to its destination, taking obstacles into account.

4. Decision-Making: The system makes real-time decisions, such as when to stop, change lanes, or slow down.

5. Control Execution: Finally, the car’s control system executes the necessary maneuvers, such as steering or braking.
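Production planners work in continuous space and account for vehicle dynamics, but the core idea behind the path-planning step, searching for the cheapest collision-free path, can be illustrated with A* search on an occupancy grid. In this simplified sketch, the road ahead is a small hypothetical grid where “#” marks an obstacle, each move costs 1 and the Manhattan distance serves as the heuristic.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* search on a 4-connected grid; '#' cells are obstacles.

    Manhattan distance is an admissible heuristic here, so the result is a
    shortest path in number of moves, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    best_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


# Car at the bottom (row 3) must reach row 0; an obstacle blocks its column.
grid = [
    ".....",
    "..#..",
    "..#..",
    ".....",
]
print(plan_path(grid, (3, 2), (0, 2)))
```

The returned path detours around the blocked cells in the fewest possible moves; which side it chooses depends only on tie-breaking, since both detours cost the same.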

These steps happen continuously and in real time, which enables the car to respond to changes in its environment within milliseconds.

Real World Case Studies: Tesla and Waymo

Tesla’s Autopilot system is one of the best-known applications of self-driving technology. Tesla relies on cameras for object detection and lane tracking through its proprietary “Tesla Vision” system; the company began removing radar from its vehicles in 2021. Unlike many companies that use lidar, Tesla has chosen to focus on vision-based technology, believing that this approach will allow for more scalable and cost-effective autonomous driving.

– Autopilot and Full Self-Driving (FSD): Tesla’s Autopilot and FSD features offer lane centering, adaptive cruise control, self-parking and more. These systems use neural networks that process visual data from cameras to detect and interpret objects in real time.

– Challenges: Tesla’s camera-based approach has raised concerns about its ability to perform reliably in poor visibility conditions, such as fog or heavy rain. Tesla has also faced criticism for labeling its system “Full Self-Driving,” as it still requires human supervision.

Waymo, a subsidiary of Alphabet, is another leader in self-driving technology. Unlike Tesla, Waymo uses lidar extensively, along with radar and cameras, to build a highly detailed picture of its surroundings. Waymo’s autonomous driving technology, known as the Waymo Driver, is widely regarded for its accuracy and reliability.

– Comprehensive Sensor Suite: Waymo’s cars are equipped with lidar, radar and high-resolution cameras, which together form a robust perception system that lets the car detect objects even in challenging conditions.

– Ride-Hailing Services: Waymo operates fully autonomous ride-hailing services in several cities, including Phoenix, Arizona and San Francisco, California, where riders can travel in driverless cars with no human operator on board.

– Challenges: Despite its advancements, Waymo faces difficulties in scaling its technology to different cities and handling complex urban environments with high traffic and unpredictable pedestrian behavior.

Challenges and Ethical Implications of Self-Driving Car Technology

1. Technical Challenges

Self-driving technology is still evolving, and several technical challenges remain:

– Weather Conditions: Rain, fog and snow can interfere with camera and sensor accuracy, which makes it difficult for the car to interpret its surroundings.

– Complex Urban Environments: Heavy traffic, cyclists, pedestrians and unexpected obstacles make city driving particularly challenging for AVs.

– Data Processing and Latency: Self-driving cars must process enormous amounts of data in real time, and even slight delays can affect the vehicle’s response time and safety.
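A quick calculation shows why latency matters: during each processing delay the car keeps moving on information that is already out of date. The sketch assumes a highway speed of 108 km/h (30 m/s) and a few illustrative latency values; it does not describe any specific vehicle.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Meters the car travels while one processing cycle is still running."""
    speed_mps = speed_kmh / 3.6
    return speed_mps * latency_ms / 1000.0


for latency_ms in (50, 100, 200):
    meters = distance_during_latency(108.0, latency_ms)
    print(f"{latency_ms} ms of latency at 108 km/h = {meters:.1f} m traveled")
```

At this speed, every extra 100 ms of delay means about 3 m traveled before the system can react to new data.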

2. Ethical Considerations

As AV technology advances, ethical concerns also arise, particularly in the context of decision making and privacy.

– Decision Making in Accidents: Self-driving cars must be programmed to make split-second decisions in emergencies, which raises questions about how to prioritize human lives in potential accident scenarios. For example, should an AV prioritize its passengers’ safety over that of pedestrians?

– Privacy: AVs collect vast amounts of data about their surroundings, which can include images of people and places. Ensuring user privacy and data protection is therefore crucial as self-driving technology becomes more widespread.

– Job Impact: Widespread adoption of autonomous vehicles could lead to significant job displacement in the transportation industry, particularly for truck drivers, taxi drivers and delivery personnel.

3. Regulatory and Legal Challenges

Regulation is essential for the safe deployment of self-driving cars, but the legal framework around AVs is still developing. Questions about liability in case of accidents, insurance policies and safety standards all need to be addressed before AVs can become mainstream.

The Future of Self Driving Technology

The future of self-driving cars looks promising, with potential applications that could transform transportation, reduce traffic accidents and improve mobility for people with disabilities. However, achieving full autonomy (SAE Level 5, in which a vehicle can drive itself everywhere and under all conditions) remains a significant technical challenge, and it will likely take years before AVs can operate safely without any human intervention. Here are some trends to watch:

– Increased Use of AI and Deep Learning: As AI algorithms become more sophisticated, self-driving cars should gain a better understanding of their surroundings, improving safety and efficiency.

– Enhanced Sensor Fusion: Future self-driving cars will likely use more advanced combinations of sensors to improve accuracy, especially in complex driving conditions.

– Stronger Regulations: Governments and regulatory bodies are expected to implement stricter standards and ethical guidelines for autonomous vehicles to ensure public safety.

Many experts predict that fully autonomous vehicles will first become common in controlled environments, such as highways and designated urban areas, before expanding to more complex settings.

Conclusion

Computer vision is fundamental to self-driving technology, enabling vehicles to interpret and respond to their surroundings with remarkable precision. By integrating object detection, lane detection and obstacle avoidance, AVs are becoming increasingly capable of navigating complex environments. However, significant technical, ethical and regulatory challenges remain.

As companies like Tesla and Waymo continue to advance self-driving technology, the road to fully autonomous vehicles is exciting but complex. Understanding the mechanics and difficulties of computer vision in self-driving cars helps us appreciate both the potential of this transformative technology and the work still needed to make it a reality.

With continued innovation and collaboration, self-driving cars hold the promise of safer, more efficient transportation for all.