Deep learning is revolutionizing how machines interpret complex data. From powering voice assistants to enabling self-driving cars, deep learning has become one of the most transformative fields in artificial intelligence (AI). But what exactly is deep learning, and how does it work?

In this post, we explain how deep learning works and how it differs from traditional machine learning, explore popular deep learning architectures and look at some of its real-world applications.

What Is Deep Learning?

Deep learning is a subset of machine learning that uses artificial neural networks with many layers, loosely inspired by the human brain, to recognize patterns and make decisions. While traditional machine learning models often rely on structured data and manual feature engineering, deep learning models learn to extract features automatically from raw data. This makes them especially powerful for handling unstructured data, like images, audio and text.

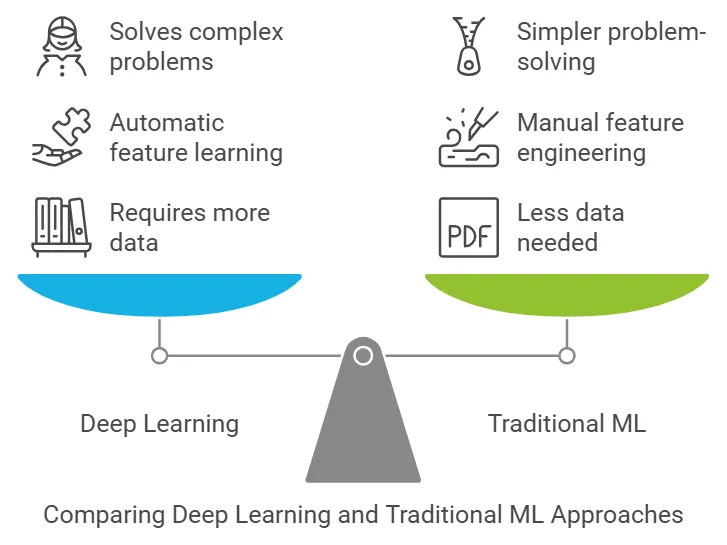

How Deep Learning Differs from Traditional Machine Learning

– Data Requirements: Deep learning models generally require more data than traditional machine learning models. Their performance usually improves as the training dataset grows, which is why large datasets are central to their success.

– Feature Engineering: Unlike traditional models, deep learning models learn useful features directly from the data, which reduces the need for extensive manual feature engineering.

– Complexity: Deep learning can solve more complex problems that are difficult to tackle with traditional machine learning, such as image and speech recognition.

How Neural Networks Work

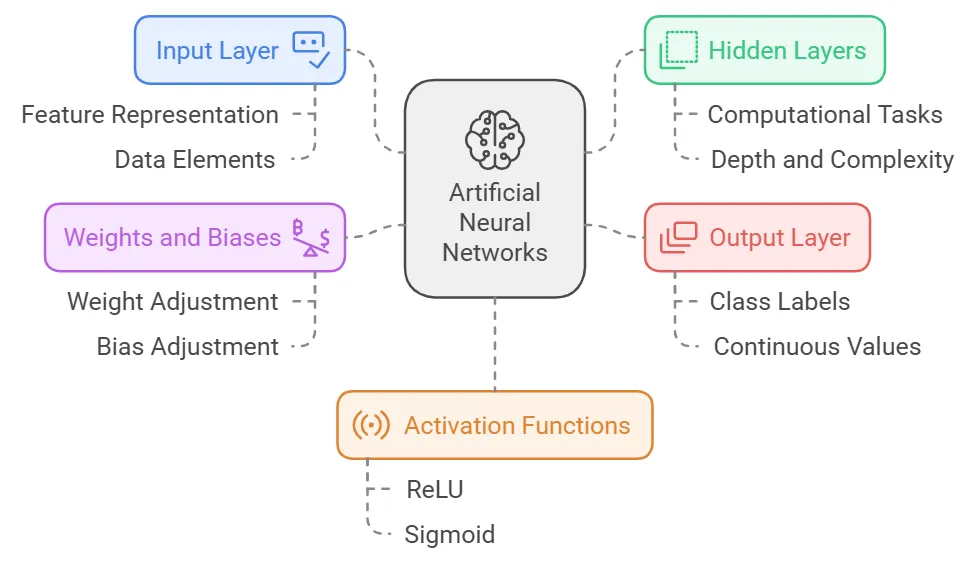

At the heart of deep learning are artificial neural networks (ANNs), which are inspired by the structure of the human brain. A neural network consists of layers of nodes (or “neurons”) that process data. Each layer of neurons learns a different set of features from the input data, gradually transforming raw data into meaningful patterns.

1. Input Layer: This layer receives the initial data. Each neuron in the input layer represents a feature or element of the data (e.g., pixels in an image, words in a sentence).

2. Hidden Layers: These layers perform most of the computations. Deep neural networks can have dozens or even hundreds of hidden layers, each one transforming the data in a way that allows the network to recognize complex patterns.

3. Output Layer: This layer produces the final output, which could be a class label (like “cat” or “dog” in an image recognition task) or a continuous value (like a predicted price).

4. Weights and Biases: Weights determine how strongly each input influences a neuron, while a bias shifts the neuron’s weighted sum up or down independently of the inputs. During training, the model adjusts these values to minimize the error between its predictions and the correct answers.

5. Activation Functions: Each neuron applies an activation function to the weighted sum of its inputs plus its bias, which determines how strongly it is “activated”. Common activation functions include ReLU (Rectified Linear Unit) and sigmoid, which add non-linearity to the network. Without this non-linearity, any stack of layers would collapse into a single linear transformation, and the network could not learn complex patterns.
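To make the layers, weights, biases and activation functions above concrete, here is a minimal forward pass through a tiny network, written in plain Python so it runs without any libraries. It assumes a made-up network with 2 inputs, one hidden layer of 3 ReLU neurons and 1 sigmoid output neuron; the parameter values are hypothetical and hand-picked, not learned.

```python
import math

def relu(z):
    # ReLU: pass positive values through, clamp negative values to zero
    return max(0.0, z)

def sigmoid(z):
    # Sigmoid: squash any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    # Each neuron computes a weighted sum of all inputs plus its bias,
    # then applies the activation function to that sum.
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# Hypothetical, hand-picked parameters (a real network learns these in training).
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]]  # 3 neurons x 2 inputs
hidden_biases = [0.1, 0.0, 0.2]
output_weights = [[1.0, -1.5, 0.5]]                      # 1 neuron x 3 hidden values
output_biases = [0.05]

def forward(x):
    hidden = dense_layer(x, hidden_weights, hidden_biases, relu)          # hidden layer
    output = dense_layer(hidden, output_weights, output_biases, sigmoid)  # output layer
    return output[0]

print(forward([1.0, 2.0]))  # → about 0.069
```

For the input `[1.0, 2.0]`, the hidden layer produces roughly `[0.2, 1.9, 0.0]` (ReLU zeroes out the third neuron's negative sum), and the sigmoid turns the output neuron's weighted sum of about -2.6 into a value between 0 and 1 that could be read as a probability.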

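Each connection between neurons has a weight, and each neuron’s activation function determines how much signal it passes to the next layer. The network learns through a process called backpropagation: after each prediction, it measures the error, works backward through the layers to compute how much each weight contributed to that error, and then adjusts the weights to reduce it. Repeated over many examples, this process gradually teaches the network to make accurate predictions.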
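The training loop can be sketched for the simplest possible case: a single sigmoid neuron learning the logical OR function. With one neuron, backpropagation reduces to a single application of the chain rule; a deep network applies the same rule layer by layer from the output back to the input. The choices here (binary cross-entropy loss, plain gradient descent, a learning rate of 0.5 and 5000 epochs) are our own illustrative assumptions, not the only way to train a network.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset: the logical OR function as (inputs, target) pairs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def predict(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def loss(w, b):
    # Mean binary cross-entropy between predictions and targets.
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total += -math.log(p) if y == 1.0 else -math.log(1.0 - p)
    return total / len(data)

def train(epochs=5000, learning_rate=0.5):
    w, b = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            # Chain rule: for sigmoid + cross-entropy, dLoss/dz simplifies to (p - y),
            # and dz/dw_k = x_k, dz/db = 1.
            dz = predict(w, b, x) - y
            grad_w[0] += dz * x[0]
            grad_w[1] += dz * x[1]
            grad_b += dz
        # Gradient descent: move each parameter against its averaged gradient.
        w = [w[0] - learning_rate * grad_w[0] / n, w[1] - learning_rate * grad_w[1] / n]
        b -= learning_rate * grad_b / n
    return w, b

w, b = train()
for x, y in data:
    print(x, y, round(predict(w, b, x), 3))
```

Starting from all-zero parameters, every prediction is 0.5; after training, the neuron's outputs sit close to the OR targets and the loss is far lower than where it started.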

Popular Deep Learning Architectures

Deep learning has evolved to include specialized architectures suited to different types of data and tasks. Here are three of the most popular deep learning architectures:

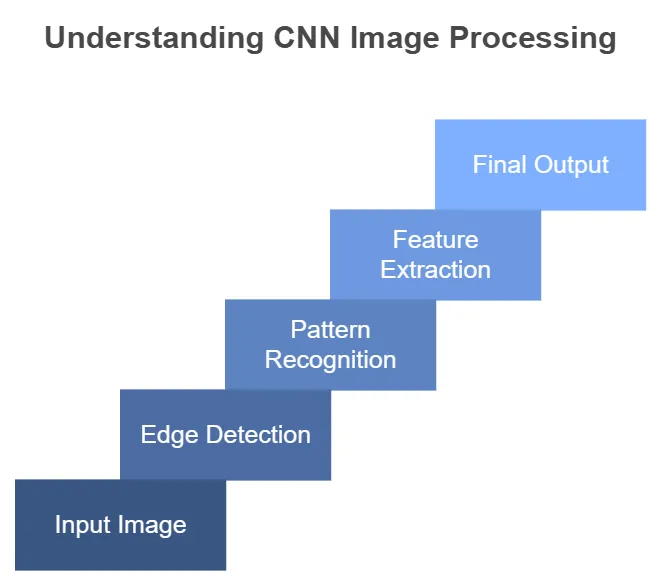

1. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are primarily used for image processing and are highly effective for tasks like image classification and object detection. CNNs slide a series of small filters (grids of learned weights) across the input image, which allows the network to detect spatial features such as edges, textures and shapes.
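Here is a minimal sketch of how one filter detects an edge. It assumes a tiny made-up grayscale image and a hand-written vertical-edge filter; in a real CNN the filter weights are learned during training rather than chosen by hand. Like most deep learning libraries, it does not flip the kernel (technically cross-correlation) and only computes positions where the filter fits entirely inside the image.

```python
def conv2d(image, kernel):
    # "Valid" 2D convolution: slide the kernel over every position where it
    # fits inside the image and sum the element-wise products.
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 4x6 image: dark pixels (0) on the left, bright pixels (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-written vertical-edge filter: responds where brightness rises left to right.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

for row in conv2d(image, vertical_edge):
    print(row)
# [0, 3, 3, 0]
# [0, 3, 3, 0]
```

The output (a "feature map") is zero over flat regions and large exactly where the dark-to-bright boundary sits, which is what "detecting a spatial feature" means in practice. A CNN stacks many such filters, so later layers can combine edges into textures and shapes.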

Use Cases:

– Image Recognition: CNNs power applications like facial recognition in smartphones.

– Object Detection: Detecting and localizing objects within an image, useful in applications like autonomous driving and surveillance.

– Medical Imaging: Analyzing X-rays or MRIs to detect anomalies, like tumors or fractures.

2. Recurrent Neural Networks (RNNs)

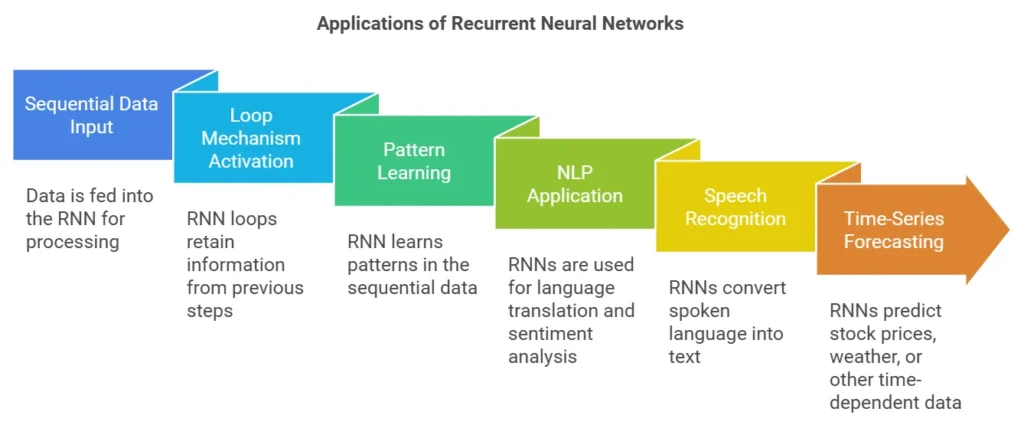

Recurrent Neural Networks (RNNs) are designed for sequential data, making them ideal for tasks like language modeling and time series prediction. RNNs use loops in the network to retain information from previous steps, which enables them to learn patterns in sequences.
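The “loop” in an RNN is simply a hidden state that is fed back in at the next step. Below is a minimal sketch of a single-unit RNN, assuming scalar inputs and hypothetical hand-picked weights (`W_X`, `W_H`, `B`); a real RNN uses vectors and matrices whose values are learned.

```python
import math

# Hypothetical scalar parameters for a one-unit RNN (chosen by hand, not learned).
W_X = 0.8  # weight on the current input
W_H = 0.5  # weight on the previous hidden state (the recurrent "loop")
B = 0.0    # bias

def rnn(sequence, h=0.0):
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state,
        # so information from earlier steps is carried forward.
        h = math.tanh(W_X * x + W_H * h + B)
        states.append(h)
    return states

print(rnn([1.0, 0.0, 0.0]))  # the first input still affects the last state
print(rnn([0.0, 0.0, 0.0]))  # all zeros: the state stays at 0.0
```

The two sequences differ only in their first element, yet their final hidden states differ, showing that the network retains information from previous steps. Note also that the first input's influence shrinks at every step in this example.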

Use Cases:

– Natural Language Processing (NLP): RNNs have been widely used for language translation and sentiment analysis.

– Speech Recognition: Systems like Google’s Voice Search have used RNNs to convert spoken language into text.

– Time Series Forecasting: Predicting stock prices, weather, or any other data that changes over time.

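A limitation of standard RNNs is that they struggle with long-term dependencies, where information from much earlier in a sequence is needed to inform the current prediction. During training, the error signal tends to shrink as it is propagated back through many time steps (the vanishing gradient problem). Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) are two types of RNNs that address this problem by adding gates, learned mechanisms that decide what information to keep, update or forget, so they can “remember” information over extended sequences.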
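The gating idea behind LSTMs can be sketched with a single-unit LSTM cell using the standard forget, input and output gates. All parameter values below are hypothetical and hand-picked to make the behavior easy to see: the forget gate has a large bias so it stays close to 1, which lets the memory cell hold on to a value written at the first step.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical hand-picked parameters: (input weight, recurrent weight, bias) per gate.
PARAMS = {
    "forget": (0.0, 0.0, 5.0),     # large bias: keep almost all of the old memory
    "input": (10.0, 0.0, -5.0),    # opens only when the input is large
    "candidate": (1.0, 0.0, 0.0),  # proposed new content for the memory cell
    "output": (0.0, 0.0, 5.0),     # mostly expose the memory as the hidden state
}

def gate(name, x, h, fn):
    w, u, b = PARAMS[name]
    return fn(w * x + u * h + b)

def lstm(sequence, h=0.0, c=0.0):
    for x in sequence:
        f = gate("forget", x, h, sigmoid)       # how much old memory to keep
        i = gate("input", x, h, sigmoid)        # how much new content to write
        g = gate("candidate", x, h, math.tanh)  # the new content itself
        o = gate("output", x, h, sigmoid)       # how much memory to reveal
        c = f * c + i * g                       # update the memory cell
        h = o * math.tanh(c)                    # new hidden state
    return h, c

# Write a value at step 1, then feed 20 steps of zeros.
h, c = lstm([1.0] + [0.0] * 20)
print(round(c, 2))
```

Because the cell state is updated additively and scaled only by the forget gate, most of the value written at the first step survives 20 steps later. In a trained LSTM the gates are computed from learned weights, so the network itself decides what to remember and what to forget.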

3. Generative Adversarial Networks (GANs)

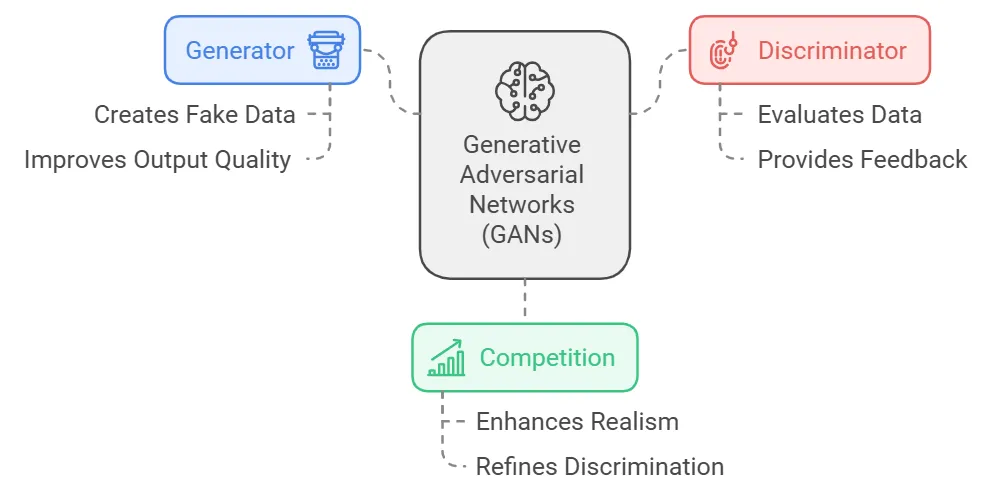

Generative Adversarial Networks (GANs) are a type of deep learning model that consists of two neural networks trained against each other: a generator and a discriminator. The generator creates synthetic (fake) data, while the discriminator tries to distinguish between real and fake data. As the discriminator gets better at spotting fakes, the generator is pushed to produce increasingly realistic outputs.
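The competition between the two networks is expressed through their loss functions. The sketch below shows the standard binary cross-entropy GAN losses, including the commonly used “non-saturating” generator loss. It assumes the discriminator's outputs are probabilities that a sample is real; the example scores are made-up numbers, and the networks themselves are omitted.

```python
import math

def discriminator_loss(d_real, d_fake):
    # The discriminator wants D(x) -> 1 on real samples and D(G(z)) -> 0 on fakes.
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    # Non-saturating generator loss: the generator wants D(G(z)) -> 1,
    # i.e. it wants its fakes to be classified as real.
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores for a batch of real and generated samples.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))  # discriminator is winning: low loss
print(discriminator_loss([0.5], [0.5]))            # discriminator is guessing: 2*ln(2)
print(generator_loss([0.1]), generator_loss([0.9]))  # generator wants higher scores
```

Training alternates between the two: a step that lowers the discriminator's loss makes fakes easier to spot, which raises the generator's loss, and the generator's next step pushes its outputs toward samples the discriminator scores as real.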

Use Cases:

– Image Generation: GANs can generate realistic images, such as human faces or artworks.

– Data Augmentation: GANs create synthetic data for training other models, especially in fields where collecting data is expensive or challenging, like medical imaging.

– Style Transfer: Converting images from one style to another, such as turning a photo into a painting in the style of Van Gogh.

Real World Applications of Deep Learning

Deep learning has made significant contributions across multiple industries. Here are some prominent examples of how it is being used today:

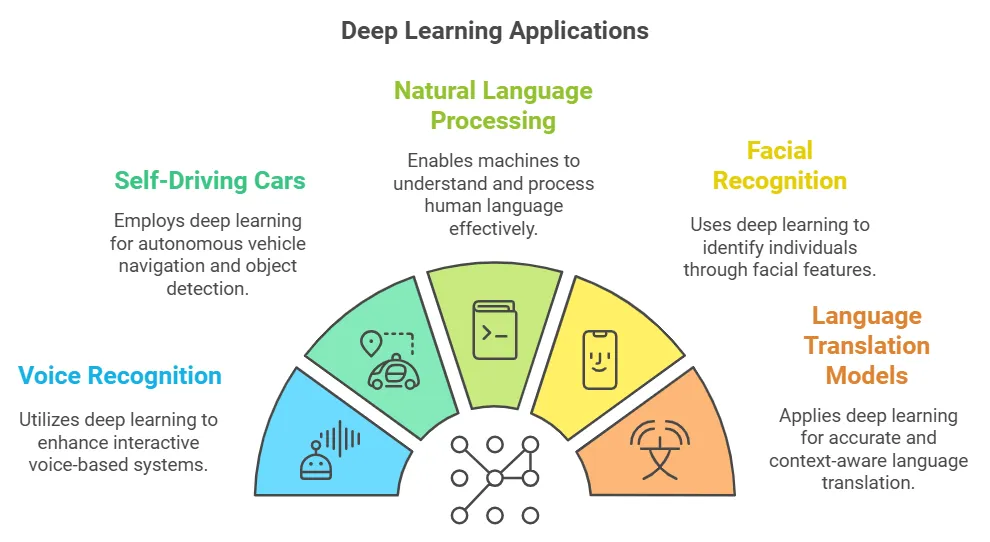

1. Voice Recognition

Voice-activated assistants like Alexa, Siri and Google Assistant rely on deep learning for voice recognition. By analyzing patterns in audio data, deep learning models can accurately understand and process spoken commands, making voice-based interactions more intuitive.

– Example: Amazon’s Alexa uses deep learning to distinguish between different voices in a household, so it can provide personalized responses.

2. Self-Driving Cars

Self-driving cars use deep learning to interpret data from sensors such as cameras and radar, which helps the vehicle understand its surroundings. Convolutional neural networks (CNNs) process visual data to identify objects, while techniques such as reinforcement learning are being explored for planning and navigation.

– Example: Tesla’s driver-assistance systems use a combination of deep learning techniques to detect pedestrians, other vehicles and road signs, which allows the cars to steer, accelerate and brake with a degree of autonomy while the driver supervises.

3. Natural Language Processing (NLP)

Natural language processing (NLP) allows machines to understand human language. Deep learning has made it possible for applications to perform tasks like translation, sentiment analysis and even question answering.

– Example: Google Translate uses deep learning models that process whole sentences rather than translating word by word, which lets them capture context and produce more accurate translations.

4. Facial Recognition

Facial recognition technology uses CNNs to analyze facial features and identify individuals. This technology is used in smartphones for security, in social media for tagging photos and even in law enforcement for identification.

– Example: Apple’s Face ID unlocks iPhones by using the TrueDepth camera to capture a depth map of the user’s face, which neural networks compare against the enrolled face.

5. Language Translation Models

Translation models, like those used in Google Translate, employ deep learning to understand and translate languages. These models are trained on vast amounts of text in different languages and learn to capture both individual words and their context, which helps them provide accurate translations.

– Example: Google’s Transformer model, a deep learning model architecture, is widely used for language translation and has been important to the success of Google Translate.
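The core building block of the Transformer is attention, which lets each word weigh every other word in the sentence when building its representation; this is how the model captures context. Below is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V. It assumes tiny hypothetical 2-dimensional word vectors and uses them directly as queries, keys and values; a real Transformer computes these through learned projections and adds multiple attention heads, feed-forward layers and much more.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical vectors for a three-word sentence (not real embeddings).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and shows how much one word “attends” to each word in the sentence. Here the first word attends equally to itself and to the third word (both share its first component) and less to the second.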

Key Takeaways and Future of Deep Learning

Deep learning continues to evolve, and its applications are only expanding. Here is a quick summary of the key points:

– Deep Learning Basics: A subset of machine learning focused on using neural networks to learn from data.

– Key Architectures:

  – CNNs for image data

  – RNNs for sequential data

  – GANs for generating new, realistic data

– Applications: Used in industries ranging from healthcare to retail, with applications in voice recognition, self-driving cars, NLP and more.

Conclusion

As computing power increases, so does the potential of deep learning. With advancements in hardware like GPUs and specialized AI chips, deep learning models can process more data and perform more complex tasks. The future of deep learning looks promising, with possible breakthroughs in areas like personalized medicine, advanced robotics and even AI ethics.

Deep learning is reshaping the world, enabling machines to perform tasks that once seemed impossible.

By understanding the mechanisms and architectures behind deep learning, we can appreciate its potential and its impact on industries, our daily lives and the future of AI.